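This Colab illustrates how to use the Universal Sentence Encoder-Lite for a sentence similarity task. This module is very similar to the Universal Sentence Encoder, with the only difference being that you need to run SentencePiece processing on your input sentences.

The Universal Sentence Encoder makes getting sentence-level embeddings as easy as it has historically been to look up the embeddings for individual words. The sentence embeddings can then be used to compute sentence-level semantic similarity, and to improve performance on downstream classification tasks using less supervised training data.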

Getting started

Setup

# Install seaborn for pretty visualizations
pip3 install --quiet seaborn
# Install SentencePiece package
# SentencePiece package is needed for Universal Sentence Encoder Lite. We'll
# use it for all the text processing and sentence feature ID lookup.
pip3 install --quiet sentencepiece

from absl import logging

import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

import tensorflow_hub as hub
import sentencepiece as spm
import matplotlib.pyplot as plt
import numpy as np
import os
import pandas as pd
import re
import seaborn as sns


Load the module from TF-Hub

module = hub.Module("https://tfhub.dev/google/universal-sentence-encoder-lite/2")


input_placeholder = tf.sparse_placeholder(tf.int64, shape=[None, None])
encodings = module(
    inputs=dict(
        values=input_placeholder.values,
        indices=input_placeholder.indices,
        dense_shape=input_placeholder.dense_shape))


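Load the SentencePiece model from the TF-Hub module

The SentencePiece model is conveniently stored inside the module's assets. It has to be loaded in order to initialize the processor.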

with tf.Session() as sess:
  spm_path = sess.run(module(signature="spm_path"))

sp = spm.SentencePieceProcessor()
with tf.io.gfile.GFile(spm_path, mode="rb") as f:
  sp.LoadFromSerializedProto(f.read())
print("SentencePiece model loaded at {}.".format(spm_path))

SentencePiece model loaded at b'/tmpfs/tmp/tfhub_modules/539544f0a997d91c327c23285ea00c37588d92cc/assets/universal_encoder_8k_spm.model'.

def process_to_IDs_in_sparse_format(sp, sentences):
  # A utility method that processes sentences with the sentence piece processor
  # 'sp' and returns the results in tf.SparseTensor-similar format:
  # (values, indices, dense_shape)
  ids = [sp.EncodeAsIds(x) for x in sentences]
  max_len = max(len(x) for x in ids)
  dense_shape = (len(ids), max_len)
  values = [item for sublist in ids for item in sublist]
  indices = [[row, col] for row in range(len(ids)) for col in range(len(ids[row]))]
  return (values, indices, dense_shape)
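To make the sparse layout concrete, here is a small sketch that runs the same helper on two toy sentences. `FakeProcessor` is a hypothetical stand-in for `spm.SentencePieceProcessor`, and its ID rule (one ID per whitespace token, equal to the token length) is invented for illustration only. Real SentencePiece IDs will differ.

```python
class FakeProcessor:
    """Hypothetical stand-in for spm.SentencePieceProcessor (illustration only)."""

    def EncodeAsIds(self, text):
        # Made-up rule: one ID per whitespace token, equal to the token length.
        return [len(token) for token in text.split()]


def process_to_IDs_in_sparse_format(sp, sentences):
    # Same logic as the helper above: flatten ragged ID lists into
    # (values, indices, dense_shape), the pieces of a sparse tensor.
    ids = [sp.EncodeAsIds(x) for x in sentences]
    max_len = max(len(x) for x in ids)
    dense_shape = (len(ids), max_len)
    values = [item for sublist in ids for item in sublist]
    indices = [[row, col] for row in range(len(ids)) for col in range(len(ids[row]))]
    return (values, indices, dense_shape)


values, indices, dense_shape = process_to_IDs_in_sparse_format(
    FakeProcessor(), ["hello big world", "hi"])

print(values)       # [5, 3, 5, 2]
print(indices)      # [[0, 0], [0, 1], [0, 2], [1, 0]]
print(dense_shape)  # (2, 3)
```

Each entry of `indices` is the (sentence, position) coordinate of the matching entry in `values`, and `dense_shape` is the number of sentences by the longest sentence length. Shorter sentences simply have no entries for the missing positions, so no padding IDs are needed.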

Test the module with a few examples

# Compute a representation for each message, showing various lengths supported.
word = "Elephant"
sentence = "I am a sentence for which I would like to get its embedding."
paragraph = (
    "Universal Sentence Encoder embeddings also support short paragraphs. "
    "There is no hard limit on how long the paragraph is. Roughly, the longer "
    "the more 'diluted' the embedding will be.")
messages = [word, sentence, paragraph]

values, indices, dense_shape = process_to_IDs_in_sparse_format(sp, messages)

# Reduce logging output.
logging.set_verbosity(logging.ERROR)

with tf.Session() as session:
  session.run([tf.global_variables_initializer(), tf.tables_initializer()])
  message_embeddings = session.run(
      encodings,
      feed_dict={input_placeholder.values: values,
                 input_placeholder.indices: indices,
                 input_placeholder.dense_shape: dense_shape})

  for i, message_embedding in enumerate(np.array(message_embeddings).tolist()):
    print("Message: {}".format(messages[i]))
    print("Embedding size: {}".format(len(message_embedding)))
    message_embedding_snippet = ", ".join(
        (str(x) for x in message_embedding[:3]))
    print("Embedding: [{}, ...]\n".format(message_embedding_snippet))

Message: Elephant
Embedding size: 512
Embedding: [0.053387485444545746, 0.05319438502192497, -0.05235603451728821, ...]

Message: I am a sentence for which I would like to get its embedding.
Embedding size: 512
Embedding: [0.03533291816711426, -0.047149673104286194, 0.012305588461458683, ...]

Message: Universal Sentence Encoder embeddings also support short paragraphs. There is no hard limit on how long the paragraph is. Roughly, the longer the more 'diluted' the embedding will be.
Embedding size: 512
Embedding: [-0.004081661347299814, -0.08954867720603943, 0.03737194463610649, ...]

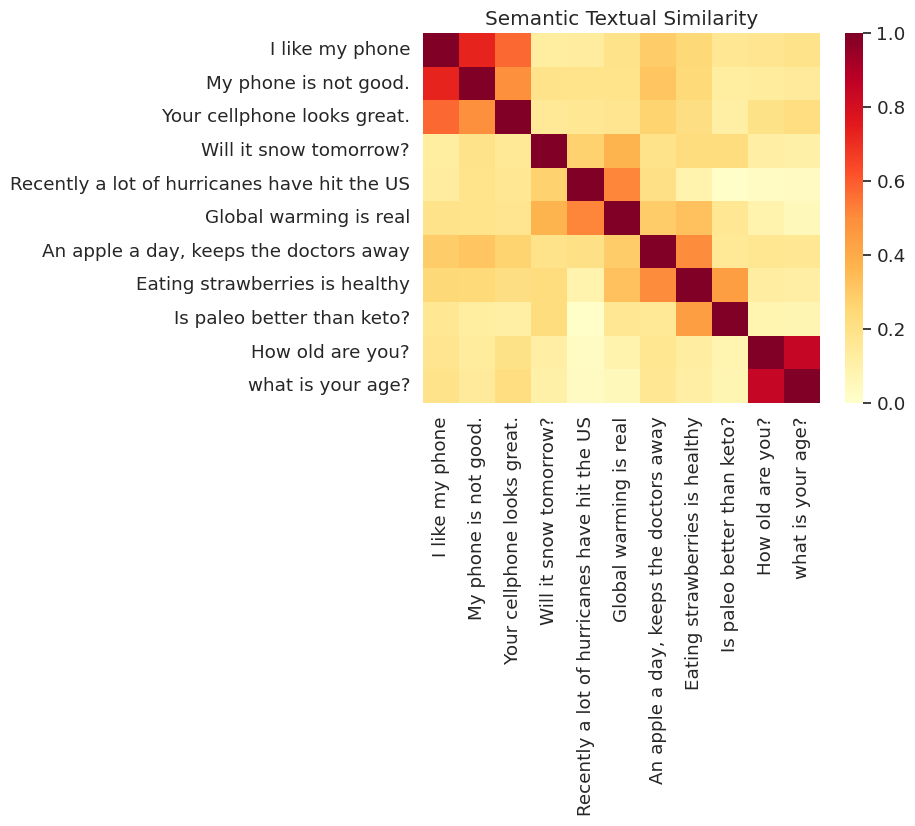

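Semantic Textual Similarity (STS) task example

The embeddings produced by the Universal Sentence Encoder are approximately normalized. The semantic similarity of two sentences can therefore be computed simply as the inner product of their encodings.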
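The reason normalization matters is that, for unit-length vectors, the inner product equals the cosine similarity. The sketch below checks this on two made-up 3-dimensional vectors (real encodings have 512 dimensions). The helper names `inner` and `l2_normalize` are illustrative and not part of the module.

```python
import math


def inner(u, v):
    # Plain inner (dot) product of two equal-length vectors.
    return sum(a * b for a, b in zip(u, v))


def l2_normalize(v):
    # Scale a vector to unit length.
    norm = math.sqrt(inner(v, v))
    return [x / norm for x in v]


# Made-up vectors standing in for two sentence embeddings.
a = l2_normalize([1.0, 2.0, 2.0])
b = l2_normalize([2.0, 1.0, 2.0])

dot = inner(a, b)
cosine = inner(a, b) / (math.sqrt(inner(a, a)) * math.sqrt(inner(b, b)))

print(dot, cosine)  # both are approximately 8/9
```

With embeddings that are only approximately normalized, the inner product is a close but not exact proxy for cosine similarity.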

def plot_similarity(labels, features, rotation):
  corr = np.inner(features, features)
  sns.set(font_scale=1.2)
  g = sns.heatmap(
      corr,
      xticklabels=labels,
      yticklabels=labels,
      vmin=0,
      vmax=1,
      cmap="YlOrRd")
  g.set_xticklabels(labels, rotation=rotation)
  g.set_title("Semantic Textual Similarity")


def run_and_plot(session, input_placeholder, messages):
  values, indices, dense_shape = process_to_IDs_in_sparse_format(sp, messages)

  message_embeddings = session.run(
      encodings,
      feed_dict={input_placeholder.values: values,
                 input_placeholder.indices: indices,
                 input_placeholder.dense_shape: dense_shape})

  plot_similarity(messages, message_embeddings, 90)

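Visualize similarity

Here we show the similarity as a heat map. The final graph is an 11x11 matrix, one row and one column per sentence in the message list, where each entry [i, j] is colored based on the inner product of the encodings for sentences i and j.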
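As a rough illustration of what the heat map displays, the sketch below builds the pairwise matrix by hand for three made-up unit vectors. It uses plain Python so it runs without the model. In the tutorial code the same matrix comes from `np.inner(features, features)`. The function name `similarity_matrix` is an assumption for this example.

```python
def similarity_matrix(features):
    # Entry [i][j] is the inner product of row i and row j.
    return [[sum(x * y for x, y in zip(u, v)) for v in features]
            for u in features]


# Made-up 2-d unit vectors standing in for three sentence embeddings.
features = [
    [1.0, 0.0],
    [0.6, 0.8],
    [0.0, 1.0],
]

corr = similarity_matrix(features)
for row in corr:
    print(row)
```

The matrix has one row and one column per input, is symmetric, and has values close to 1 on the diagonal because each vector is compared with itself.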

messages = [
    # Smartphones
    "I like my phone",
    "My phone is not good.",
    "Your cellphone looks great.",

    # Weather
    "Will it snow tomorrow?",
    "Recently a lot of hurricanes have hit the US",
    "Global warming is real",

    # Food and health
    "An apple a day, keeps the doctors away",
    "Eating strawberries is healthy",
    "Is paleo better than keto?",

    # Asking about age
    "How old are you?",
    "what is your age?",
]

with tf.Session() as session:
  session.run(tf.global_variables_initializer())
  session.run(tf.tables_initializer())
  run_and_plot(session, input_placeholder, messages)

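Evaluation: STS (Semantic Textual Similarity) Benchmark

The STS Benchmark provides an intrinsic evaluation of the degree to which similarity scores computed using sentence embeddings align with human judgements. The benchmark requires systems to return similarity scores for a diverse selection of sentence pairs. Pearson correlation is then used to evaluate the quality of the machine similarity scores against human judgements.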
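For readers who want to see what the Pearson coefficient measures, here is a minimal pure-Python sketch on made-up scores. The `pearson` helper and both score lists are illustrative assumptions, not benchmark data.

```python
import math


def pearson(xs, ys):
    # Pearson correlation: covariance divided by the product of the
    # standard deviations (the 1/n factors cancel out).
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up human ratings and made-up model scores for five sentence pairs.
human = [0.0, 1.5, 3.0, 4.5, 5.0]
model = [0.1, 0.3, 0.5, 0.9, 0.8]

r = pearson(model, human)
# Rescaling the model scores by a positive linear map leaves r unchanged.
r_rescaled = pearson([10 * m + 2 for m in model], human)

print(r, r_rescaled)
```

Because the coefficient is unchanged by positive linear rescaling, the model scores do not need to be on the same scale as the human ratings.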

Download data

import pandas
import scipy.stats  # scipy.stats.pearsonr is used in the evaluation step
import math


def load_sts_dataset(filename):
  # Loads a subset of the STS dataset into a DataFrame. In particular both
  # sentences and their human rated similarity score.
  sent_pairs = []
  with tf.gfile.GFile(filename, "r") as f:
    for line in f:
      ts = line.strip().split("\t")
      # (sent_1, sent_2, similarity_score)
      sent_pairs.append((ts[5], ts[6], float(ts[4])))
  return pandas.DataFrame(sent_pairs, columns=["sent_1", "sent_2", "sim"])


def download_and_load_sts_data():
  sts_dataset = tf.keras.utils.get_file(
      fname="Stsbenchmark.tar.gz",
      origin="http://ixa2.si.ehu.es/stswiki/images/4/48/Stsbenchmark.tar.gz",
      extract=True)

  sts_dev = load_sts_dataset(
      os.path.join(os.path.dirname(sts_dataset), "stsbenchmark", "sts-dev.csv"))
  sts_test = load_sts_dataset(
      os.path.join(
          os.path.dirname(sts_dataset), "stsbenchmark", "sts-test.csv"))

  return sts_dev, sts_test


sts_dev, sts_test = download_and_load_sts_data()

Downloading data from http://ixa2.si.ehu.es/stswiki/images/4/48/Stsbenchmark.tar.gz 409630/409630 [==============================] - 1s 2us/step
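The loader reads tab-separated lines and keeps three fields: the score at index 4 and the two sentences at indices 5 and 6. The sketch below applies the same parsing to two in-memory lines so the layout is visible without downloading anything. The sample rows, including the values in the first four columns, are made up for illustration, and `parse_sts_line` is a hypothetical helper name.

```python
def parse_sts_line(line):
    # Same field selection as load_sts_dataset:
    # index 4 is the score, indices 5 and 6 are the two sentences.
    ts = line.strip().split("\t")
    return (ts[5], ts[6], float(ts[4]))


# Made-up rows in the assumed seven-column, tab-separated layout.
sample_lines = [
    "genre-a\tfile-a\t2012\t0001\t5.000\tA plane is taking off.\tAn air plane is taking off.\n",
    "genre-b\tfile-b\t2015\t0002\t0.500\tA man is cooking.\tA dog runs in a field.\n",
]

pairs = [parse_sts_line(line) for line in sample_lines]
for sent_1, sent_2, sim in pairs:
    print(sim, "|", sent_1, "|", sent_2)
```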

Build the evaluation graph

sts_input1 = tf.sparse_placeholder(tf.int64, shape=(None, None))
sts_input2 = tf.sparse_placeholder(tf.int64, shape=(None, None))

# For evaluation we use exactly normalized rather than
# approximately normalized.
sts_encode1 = tf.nn.l2_normalize(
    module(
        inputs=dict(values=sts_input1.values,
                    indices=sts_input1.indices,
                    dense_shape=sts_input1.dense_shape)),
    axis=1)
sts_encode2 = tf.nn.l2_normalize(
    module(
        inputs=dict(values=sts_input2.values,
                    indices=sts_input2.indices,
                    dense_shape=sts_input2.dense_shape)),
    axis=1)

sim_scores = -tf.acos(tf.reduce_sum(tf.multiply(sts_encode1, sts_encode2), axis=1))
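The `sim_scores` expression takes the row-wise dot product of the two normalized encodings and returns the negated arccosine, that is, the negated angle between them. A smaller angle therefore gives a higher score. The sketch below reproduces that computation for single vectors in plain Python. The clamp to [-1, 1] is an addition for this example to guard against rounding error and is not part of the graph above. `angular_score` is a hypothetical name.

```python
import math


def angular_score(u, v):
    # Dot product of two unit vectors is the cosine of the angle between them.
    dot = sum(a * b for a, b in zip(u, v))
    # Clamp (added for this sketch) so rounding error cannot push acos
    # outside its domain.
    dot = max(-1.0, min(1.0, dot))
    # Negate the angle so that more similar vectors score higher.
    return -math.acos(dot)


same = angular_score([1.0, 0.0], [1.0, 0.0])        # angle 0
orthogonal = angular_score([1.0, 0.0], [0.0, 1.0])  # angle pi/2
opposite = angular_score([1.0, 0.0], [-1.0, 0.0])   # angle pi

print(same, orthogonal, opposite)
```

The scores range from 0 for identical directions down to -pi for opposite directions, so the ordering matches the ordering by cosine similarity.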


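Evaluate sentence embeddings

Choose dataset for benchmark

# Assumption: evaluate on the dev split; use sts_test for the test split.
# These lines define the inputs that run_sts_benchmark reads.
dataset = sts_dev
values1, indices1, dense_shape1 = process_to_IDs_in_sparse_format(sp, dataset['sent_1'].tolist())
values2, indices2, dense_shape2 = process_to_IDs_in_sparse_format(sp, dataset['sent_2'].tolist())
similarity_scores = dataset['sim']


def run_sts_benchmark(session):
  """Returns the similarity scores"""
  scores = session.run(
      sim_scores,
      feed_dict={
          sts_input1.values: values1,
          sts_input1.indices: indices1,
          sts_input1.dense_shape: dense_shape1,
          sts_input2.values: values2,
          sts_input2.indices: indices2,
          sts_input2.dense_shape: dense_shape2,
      })
  return scores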

with tf.Session() as session:
  session.run(tf.global_variables_initializer())
  session.run(tf.tables_initializer())
  scores = run_sts_benchmark(session)

pearson_correlation = scipy.stats.pearsonr(scores, similarity_scores)
print('Pearson correlation coefficient = {0}\np-value = {1}'.format(
    pearson_correlation[0], pearson_correlation[1]))

Pearson correlation coefficient = 0.7856484921513689
p-value = 1.0657791693e-314