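YAMNet is a pre-trained deep neural network that can predict audio events from 521 classes, such as laughter, barking, or a siren.

In this tutorial you will learn how to:

- Load and use the YAMNet model for inference.

- Build a new model using the YAMNet embeddings to classify cat and dog sounds.

- Evaluate and export your model.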

Import TensorFlow and other libraries

Start by installing TensorFlow I/O, which will make it easier for you to load audio files off disk.

pip install -q "tensorflow==2.11.*"

# tensorflow_io 0.28 is compatible with TensorFlow 2.11

pip install -q "tensorflow_io==0.28.*"

import os

from IPython import display

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import tensorflow as tf

import tensorflow_hub as hub

import tensorflow_io as tfio


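About YAMNet

YAMNet is a pre-trained neural network that employs the MobileNetV1 depthwise-separable convolution architecture. It takes an audio waveform as input and makes independent predictions for each of the 521 audio events from the AudioSet corpus.

Internally, the model extracts "frames" from the audio signal and processes batches of these frames. This version of the model uses frames that are 0.96 seconds long and extracts one frame every 0.48 seconds.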
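To make the frame timing concrete, here is a minimal sketch (not part of the YAMNet code) that converts the 0.96 s frame length and 0.48 s hop into sample indices at 16 kHz. The helper name `frame_sample_spans` is made up for illustration, and the sketch ignores any padding the model may apply at the end of a clip.

```python
SAMPLE_RATE = 16_000   # YAMNet expects 16 kHz audio
FRAME_SECONDS = 0.96   # frame length stated above
HOP_SECONDS = 0.48     # a new frame starts every 0.48 s


def frame_sample_spans(num_frames):
    """Return (start, end) sample indices for the first `num_frames` frames.

    Hypothetical helper for illustration only; it does not call YAMNet.
    """
    frame_len = round(FRAME_SECONDS * SAMPLE_RATE)  # 15360 samples
    hop_len = round(HOP_SECONDS * SAMPLE_RATE)      # 7680 samples
    return [(i * hop_len, i * hop_len + frame_len) for i in range(num_frames)]


spans = frame_sample_spans(3)
print(spans)  # [(0, 15360), (7680, 23040), (15360, 30720)]
```

Because the hop is half the frame length, each frame overlaps the previous one by 50%.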

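The model accepts a 1-D float32 Tensor or NumPy array containing a waveform of arbitrary length, represented as single-channel (mono) 16 kHz samples in the range [-1.0, +1.0]. This tutorial contains code to help you convert WAV files into the supported format.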
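As a rough illustration of that input format, the sketch below converts 16-bit PCM samples into a mono float32 array in [-1.0, +1.0] using NumPy. The function name `pcm16_to_yamnet_input` is an assumption made for this example; it does not resample, so it assumes the audio is already at 16 kHz. The tutorial itself relies on `tf.audio.decode_wav` and `tfio.audio.resample` for the real conversion.

```python
import numpy as np


def pcm16_to_yamnet_input(pcm):
    """Convert int16 PCM of shape (samples,) or (samples, channels) to mono float32.

    Hypothetical helper: it only rescales and downmixes, it does not resample.
    """
    audio = np.asarray(pcm, dtype=np.float32) / 32768.0  # scale int16 into [-1.0, 1.0)
    if audio.ndim == 2:
        audio = audio.mean(axis=1)                        # average the channels to mono
    return audio.astype(np.float32)


# Four stereo samples, made up for the example.
stereo = np.array([[0, 0], [16384, -16384], [32767, 32767], [-32768, -32768]],
                  dtype=np.int16)
mono = pcm16_to_yamnet_input(stereo)
print(mono.dtype, mono.shape)  # float32 (4,)
```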

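The model returns 3 outputs: the class scores, embeddings (which you will use for transfer learning), and the log mel spectrogram. You can find more details here.

One specific use of YAMNet is as a high-level feature extractor: the 1,024-dimensional embedding output. You will take the embeddings produced by the base (YAMNet) model and feed them into a shallower model consisting of one hidden tf.keras.layers.Dense layer. Then, you will train the network on a small amount of data for audio classification, without requiring a lot of labeled data or end-to-end training. (This is similar to transfer learning for image classification with TensorFlow Hub.)

First, you will test the model and see the results of classifying audio. You will then construct the data pre-processing pipeline.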

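Loading YAMNet from TensorFlow Hub

You are going to use a pre-trained YAMNet from TensorFlow Hub to extract the embeddings from the sound files.

Loading a model from TensorFlow Hub is straightforward: choose the model, copy its URL, and use the load function.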

yamnet_model_handle = 'https://tfhub.dev/google/yamnet/1'

yamnet_model = hub.load(yamnet_model_handle)


With the model loaded, you can follow the YAMNet basic usage tutorial and download a sample WAV file to run the inference.

testing_wav_file_name = tf.keras.utils.get_file('miaow_16k.wav',

'https://storage.googleapis.com/audioset/miaow_16k.wav',

cache_dir='./',

cache_subdir='test_data')

print(testing_wav_file_name)

Downloading data from https://storage.googleapis.com/audioset/miaow_16k.wav 215546/215546 [==============================] - 0s 0us/step ./test_data/miaow_16k.wav

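You will need a function to load audio files, which will also be used later when working with the training data. (Learn more about reading audio files and their labels in Simple audio recognition.)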

# Utility functions for loading audio files and making sure the sample rate is correct.

@tf.function
def load_wav_16k_mono(filename):
    """ Load a WAV file, convert it to a float tensor, resample to 16 kHz single-channel audio. """
    file_contents = tf.io.read_file(filename)
    wav, sample_rate = tf.audio.decode_wav(
          file_contents,
          desired_channels=1)
    wav = tf.squeeze(wav, axis=-1)
    sample_rate = tf.cast(sample_rate, dtype=tf.int64)
    wav = tfio.audio.resample(wav, rate_in=sample_rate, rate_out=16000)
    return wav

testing_wav_data = load_wav_16k_mono(testing_wav_file_name)

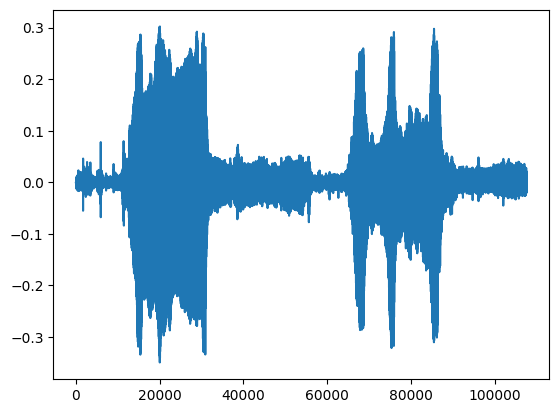

_ = plt.plot(testing_wav_data)

# Play the audio file.

display.Audio(testing_wav_data, rate=16000)


Load the class mapping

It's important to load the class names that YAMNet is able to recognize. The mapping file is available at yamnet_model.class_map_path(), in CSV format.

class_map_path = yamnet_model.class_map_path().numpy().decode('utf-8')

class_names = list(pd.read_csv(class_map_path)['display_name'])

for name in class_names[:20]:
    print(name)
print('...')

Speech
Child speech, kid speaking
Conversation
Narration, monologue
Babbling
Speech synthesizer
Shout
Bellow
Whoop
Yell
Children shouting
Screaming
Whispering
Laughter
Baby laughter
Giggle
Snicker
Belly laugh
Chuckle, chortle
Crying, sobbing
...
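Some display names contain commas (for example "Child speech, kid speaking"), which is why the mapping should be read with a real CSV parser rather than split on commas. The following is a small sketch using only the standard library. The in-memory CSV is a stand-in, its `mid` values are placeholders, and the `index,mid,display_name` column layout is an assumption; only the `display_name` column is confirmed by the code above.

```python
import csv
import io

# A tiny stand-in for the class map file. The `mid` values are placeholders,
# and the column layout is assumed for illustration.
SAMPLE_CSV = '''index,mid,display_name
0,/m/placeholder0,Speech
1,/m/placeholder1,"Child speech, kid speaking"
2,/m/placeholder2,Conversation
'''


def read_display_names(csv_text):
    """Return the display_name column, honoring quoted fields that contain commas."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row['display_name'] for row in reader]


names = read_display_names(SAMPLE_CSV)
print(names)  # ['Speech', 'Child speech, kid speaking', 'Conversation']
```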

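Run inference

YAMNet provides frame-level class scores (i.e., 521 scores for every frame). To determine clip-level predictions, the scores can be aggregated per class across frames (e.g., using mean or max aggregation). This is done below by tf.reduce_mean(scores, axis=0). Finally, to find the top-scored class at the clip level, you take the maximum of the 521 aggregated scores.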

scores, embeddings, spectrogram = yamnet_model(testing_wav_data)

class_scores = tf.reduce_mean(scores, axis=0)

top_class = tf.math.argmax(class_scores)

inferred_class = class_names[top_class]

print(f'The main sound is: {inferred_class}')

print(f'The embeddings shape: {embeddings.shape}')

The main sound is: Animal
The embeddings shape: (13, 1024)
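The choice between mean and max aggregation can change the clip-level answer. The toy sketch below uses NumPy with a made-up 3-frame, 3-class score matrix (standing in for YAMNet's N x 521 output) to show both variants; the class names and numbers are hypothetical.

```python
import numpy as np

# Hypothetical stand-in for the 521 YAMNet classes.
class_names = ['Speech', 'Animal', 'Cat']

# Made-up frame-level scores: one row per frame, one column per class.
scores = np.array([[0.1, 0.6, 0.3],
                   [0.2, 0.5, 0.9],
                   [0.1, 0.7, 0.0]])

mean_scores = scores.mean(axis=0)  # average each class across frames
max_scores = scores.max(axis=0)    # strongest single-frame score per class

print(class_names[mean_scores.argmax()])  # Animal
print(class_names[max_scores.argmax()])   # Cat
```

Mean aggregation favors classes that are present throughout the clip, while max aggregation favors a class that fires strongly in even a single frame.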

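ESC-50 dataset

The ESC-50 dataset (Piczak, 2015) is a labeled collection of 2,000 five-second-long environmental audio recordings. The dataset consists of 50 classes, with 40 examples per class.

Download the dataset and extract it.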

_ = tf.keras.utils.get_file('esc-50.zip',

'https://github.com/karoldvl/ESC-50/archive/master.zip',

cache_dir='./',

cache_subdir='datasets',

extract=True)

Downloading data from https://github.com/karoldvl/ESC-50/archive/master.zip 8192/Unknown - 0s 0us/step

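Explore the data

The metadata for each file is specified in the CSV file at ./datasets/ESC-50-master/meta/esc50.csv

and all the audio files are in ./datasets/ESC-50-master/audio/

You will create a pandas DataFrame with the mapping and use that to have a clearer view of the data.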

esc50_csv = './datasets/ESC-50-master/meta/esc50.csv'

base_data_path = './datasets/ESC-50-master/audio/'

pd_data = pd.read_csv(esc50_csv)

pd_data.head()

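Filter the data

Now that the data is stored in the DataFrame, apply some transformations:

- Filter out rows and use only the selected classes, dog and cat. If you want to use any other classes, this is where you can choose them.

- Amend the filename to have the full path. This will make loading easier later.

- Change targets to be within a specific range. In this example, dog will remain at 0, but cat will become 1 instead of its original value of 5.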

my_classes = ['dog', 'cat']

map_class_to_id = {'dog':0, 'cat':1}

filtered_pd = pd_data[pd_data.category.isin(my_classes)]

class_id = filtered_pd['category'].apply(lambda name: map_class_to_id[name])

filtered_pd = filtered_pd.assign(target=class_id)

full_path = filtered_pd['filename'].apply(lambda row: os.path.join(base_data_path, row))

filtered_pd = filtered_pd.assign(filename=full_path)

filtered_pd.head(10)

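Load the audio files and retrieve embeddings

Here you'll apply load_wav_16k_mono and prepare the WAV data for the model.

When extracting embeddings from the WAV data, you get an array of shape (N, 1024), where N is the number of frames that YAMNet found (one for every 0.48 seconds of audio).

Your model will use each frame as one input. Therefore, you need to create a new column that has one frame per row. You also need to expand the labels and the fold column to properly reflect these new rows.

The expanded fold column keeps the original values. You cannot mix frames, because when performing the splits you might end up with parts of the same audio in different splits, which would make your validation and test steps less effective.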

filenames = filtered_pd['filename']

targets = filtered_pd['target']

folds = filtered_pd['fold']

main_ds = tf.data.Dataset.from_tensor_slices((filenames, targets, folds))

main_ds.element_spec

(TensorSpec(shape=(), dtype=tf.string, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None))

def load_wav_for_map(filename, label, fold):
    return load_wav_16k_mono(filename), label, fold

main_ds = main_ds.map(load_wav_for_map)

main_ds.element_spec

(TensorSpec(shape=<unknown>, dtype=tf.float32, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None))

# applies the embedding extraction model to a wav data

def extract_embedding(wav_data, label, fold):
    ''' run YAMNet to extract embedding from the wav data '''
    scores, embeddings, spectrogram = yamnet_model(wav_data)
    num_embeddings = tf.shape(embeddings)[0]
    return (embeddings,
            tf.repeat(label, num_embeddings),
            tf.repeat(fold, num_embeddings))

# extract embedding

main_ds = main_ds.map(extract_embedding).unbatch()

main_ds.element_spec

(TensorSpec(shape=(1024,), dtype=tf.float32, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None), TensorSpec(shape=(), dtype=tf.int64, name=None))
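The combined effect of tf.repeat and unbatch() can be pictured with plain NumPy: one clip with N frame embeddings becomes N rows, each carrying a copy of the clip's label and fold. The sketch below is an analogy under that assumption, not the tf.data implementation, and `expand_clip` is a hypothetical helper name.

```python
import numpy as np


def expand_clip(embeddings, label, fold):
    """Turn one clip (N, 1024) into N rows of (embedding, label, fold).

    Hypothetical NumPy analogue of `extract_embedding(...)` followed by `unbatch()`.
    """
    num_frames = embeddings.shape[0]
    labels = np.repeat(label, num_frames)  # same label for every frame
    folds = np.repeat(fold, num_frames)    # same fold for every frame
    return list(zip(embeddings, labels, folds))


# A made-up clip with 3 frames of all-zero embeddings.
clip_embeddings = np.zeros((3, 1024), dtype=np.float32)
rows = expand_clip(clip_embeddings, label=1, fold=4)
print(len(rows), rows[0][0].shape)  # 3 (1024,)
```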

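Split the data

You will use the fold column to split the dataset into train, validation, and test sets.

ESC-50 is arranged into five uniformly sized cross-validation folds, such that clips from the same original source are always in the same fold. Find out more in the ESC: Dataset for Environmental Sound Classification paper.

The last step is to remove the fold column from the dataset, since you're not going to use it during training.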

cached_ds = main_ds.cache()

train_ds = cached_ds.filter(lambda embedding, label, fold: fold < 4)

val_ds = cached_ds.filter(lambda embedding, label, fold: fold == 4)

test_ds = cached_ds.filter(lambda embedding, label, fold: fold == 5)

# remove the folds column now that it's not needed anymore

remove_fold_column = lambda embedding, label, fold: (embedding, label)

train_ds = train_ds.map(remove_fold_column)

val_ds = val_ds.map(remove_fold_column)

test_ds = test_ds.map(remove_fold_column)

train_ds = train_ds.cache().shuffle(1000).batch(32).prefetch(tf.data.AUTOTUNE)

val_ds = val_ds.cache().batch(32).prefetch(tf.data.AUTOTUNE)

test_ds = test_ds.cache().batch(32).prefetch(tf.data.AUTOTUNE)
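Splitting on the fold value, rather than shuffling individual frames, is what keeps every frame of a clip in a single split. The pure-Python sketch below mirrors the three filter conditions on made-up (clip_id, fold) records and checks that no clip leaks across splits; the clip names are hypothetical.

```python
# Hypothetical per-frame records: (clip_id, fold). Each clip contributes several frames.
frames = [('clip_a', 1), ('clip_a', 1), ('clip_b', 4), ('clip_b', 4),
          ('clip_c', 5), ('clip_c', 5), ('clip_d', 2)]


def split_by_fold(records):
    """Mirror the tutorial's filters: folds 1-3 train, fold 4 validation, fold 5 test."""
    train = [r for r in records if r[1] < 4]
    val = [r for r in records if r[1] == 4]
    test = [r for r in records if r[1] == 5]
    return train, val, test


train, val, test = split_by_fold(frames)

# Collect the set of clip ids that ended up in each split.
train_clips, val_clips, test_clips = (
    {clip for clip, _ in part} for part in (train, val, test))

print(len(train), len(val), len(test))  # 3 2 2
print(train_clips.isdisjoint(val_clips) and train_clips.isdisjoint(test_clips))  # True
```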

Create your model

You did most of the work! Next, define a very simple Sequential model with one hidden layer and two outputs to recognize cats and dogs from sounds.

my_model = tf.keras.Sequential([

tf.keras.layers.Input(shape=(1024,), dtype=tf.float32,

name='input_embedding'),

tf.keras.layers.Dense(512, activation='relu'),

tf.keras.layers.Dense(len(my_classes))

], name='my_model')

my_model.summary()

Model: "my_model"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 512)               524800
 dense_1 (Dense)             (None, 2)                 1026
=================================================================
Total params: 525,826
Trainable params: 525,826
Non-trainable params: 0
_________________________________________________________________
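The parameter counts in the summary can be checked by hand: a Dense layer has one weight per input-unit pair plus one bias per unit. A short sketch of that arithmetic (the helper `dense_params` is defined here only for illustration):

```python
def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units


hidden = dense_params(1024, 512)  # 1024-dim embedding -> 512 hidden units
output = dense_params(512, 2)     # 512 hidden units -> 2 classes (dog, cat)
print(hidden, output, hidden + output)  # 524800 1026 525826
```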

my_model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

optimizer="adam",

metrics=['accuracy'])

callback = tf.keras.callbacks.EarlyStopping(monitor='loss',

patience=3,

restore_best_weights=True)

history = my_model.fit(train_ds,

epochs=20,

validation_data=val_ds,

callbacks=callback)

Epoch 1/20
15/15 [==============================] - 5s 42ms/step - loss: 1.3241 - accuracy: 0.8292 - val_loss: 0.8957 - val_accuracy: 0.8750
Epoch 2/20
15/15 [==============================] - 0s 5ms/step - loss: 0.4086 - accuracy: 0.8896 - val_loss: 0.4948 - val_accuracy: 0.8813
Epoch 3/20
15/15 [==============================] - 0s 5ms/step - loss: 0.5242 - accuracy: 0.8938 - val_loss: 0.8153 - val_accuracy: 0.8750
Epoch 4/20
15/15 [==============================] - 0s 5ms/step - loss: 0.3278 - accuracy: 0.9083 - val_loss: 0.2061 - val_accuracy: 0.9125
Epoch 5/20
15/15 [==============================] - 0s 5ms/step - loss: 0.2723 - accuracy: 0.9250 - val_loss: 0.3267 - val_accuracy: 0.8813
Epoch 6/20
15/15 [==============================] - 0s 4ms/step - loss: 0.2786 - accuracy: 0.9250 - val_loss: 0.2293 - val_accuracy: 0.9000
Epoch 7/20
15/15 [==============================] - 0s 4ms/step - loss: 0.2491 - accuracy: 0.9187 - val_loss: 0.2192 - val_accuracy: 0.8875
Epoch 8/20
15/15 [==============================] - 0s 4ms/step - loss: 0.1690 - accuracy: 0.9208 - val_loss: 0.2091 - val_accuracy: 0.9187
Epoch 9/20
15/15 [==============================] - 0s 4ms/step - loss: 0.1772 - accuracy: 0.9187 - val_loss: 0.2278 - val_accuracy: 0.9187
Epoch 10/20
15/15 [==============================] - 0s 4ms/step - loss: 0.2451 - accuracy: 0.9375 - val_loss: 0.5393 - val_accuracy: 0.8813
Epoch 11/20
15/15 [==============================] - 0s 4ms/step - loss: 0.1557 - accuracy: 0.9250 - val_loss: 0.3100 - val_accuracy: 0.8813
Epoch 12/20
15/15 [==============================] - 0s 4ms/step - loss: 0.1612 - accuracy: 0.9292 - val_loss: 0.6557 - val_accuracy: 0.8813
Epoch 13/20
15/15 [==============================] - 0s 4ms/step - loss: 0.3020 - accuracy: 0.9271 - val_loss: 0.2331 - val_accuracy: 0.9062
Epoch 14/20
15/15 [==============================] - 0s 5ms/step - loss: 0.4202 - accuracy: 0.9271 - val_loss: 0.5393 - val_accuracy: 0.9187

Let's run the evaluate method on the test data to make sure there is no overfitting.

loss, accuracy = my_model.evaluate(test_ds)

print("Loss: ", loss)

print("Accuracy: ", accuracy)

5/5 [==============================] - 0s 5ms/step - loss: 0.4244 - accuracy: 0.8250
Loss:  0.4244455397129059
Accuracy:  0.824999988079071

You did it!

Test your model

Next, try your model on the embeddings from the earlier test that used YAMNet only.

scores, embeddings, spectrogram = yamnet_model(testing_wav_data)

result = my_model(embeddings).numpy()

inferred_class = my_classes[result.mean(axis=0).argmax()]

print(f'The main sound is: {inferred_class}')

The main sound is: cat
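Because the model was compiled with from_logits=True, its outputs are unnormalized logits, one pair per frame. The code above averages them across frames and takes the argmax. If you also want a probability-like score, a softmax over the averaged logits is one option. The sketch below shows this with NumPy on made-up logits; the numbers are hypothetical and the [dog, cat] column order follows my_classes.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)  # subtract the max to avoid overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum()


my_classes = ['dog', 'cat']

# Made-up per-frame logits: one row per frame, columns are [dog, cat].
frame_logits = np.array([[-2.0, 3.0],
                         [0.5, 1.5],
                         [-1.0, 4.0]])

clip_logits = frame_logits.mean(axis=0)  # aggregate across frames
probs = softmax(clip_logits)             # convert logits to probabilities
print(my_classes[probs.argmax()])        # cat
```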

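Save a model that can directly take a WAV file as input

Your model works when you give it the embeddings as input.

In a real-world scenario, you'll want to use audio data as a direct input.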

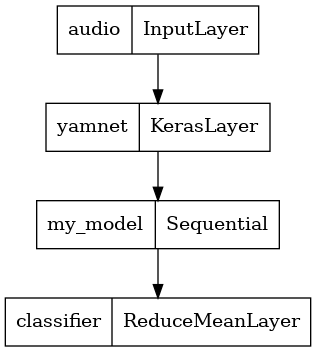

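To do that, you will combine YAMNet with your model into a single model that you can export for other applications.

To make it easier to use the model's result, the final layer will be a reduce_mean operation. When using this model for serving (which you will learn about later in the tutorial), you will need the name of the final layer. If you don't define one, TensorFlow will auto-define an incremental one, which makes it hard to test, as it will keep changing every time you train the model. When using a raw TensorFlow operation, you can't assign a name to it. To address this issue, you'll create a custom layer that applies reduce_mean and call it 'classifier'.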

class ReduceMeanLayer(tf.keras.layers.Layer):
    def __init__(self, axis=0, **kwargs):
        super(ReduceMeanLayer, self).__init__(**kwargs)
        self.axis = axis

    def call(self, input):
        return tf.math.reduce_mean(input, axis=self.axis)

saved_model_path = './dogs_and_cats_yamnet'

input_segment = tf.keras.layers.Input(shape=(), dtype=tf.float32, name='audio')

embedding_extraction_layer = hub.KerasLayer(yamnet_model_handle,

trainable=False, name='yamnet')

_, embeddings_output, _ = embedding_extraction_layer(input_segment)

serving_outputs = my_model(embeddings_output)

serving_outputs = ReduceMeanLayer(axis=0, name='classifier')(serving_outputs)

serving_model = tf.keras.Model(input_segment, serving_outputs)

serving_model.save(saved_model_path, include_optimizer=False)


tf.keras.utils.plot_model(serving_model)

Load your saved model to verify that it works as expected.

reloaded_model = tf.saved_model.load(saved_model_path)

And for the final test: given some sound data, does your model return the correct result?

reloaded_results = reloaded_model(testing_wav_data)

cat_or_dog = my_classes[tf.math.argmax(reloaded_results)]

print(f'The main sound is: {cat_or_dog}')

The main sound is: cat

If you want to try your new model in a serving setup, you can use the 'serving_default' signature.

serving_results = reloaded_model.signatures['serving_default'](testing_wav_data)

cat_or_dog = my_classes[tf.math.argmax(serving_results['classifier'])]

print(f'The main sound is: {cat_or_dog}')

The main sound is: cat

(Optional) Some more testing

The model is ready.

Let's compare it to YAMNet on the test dataset.

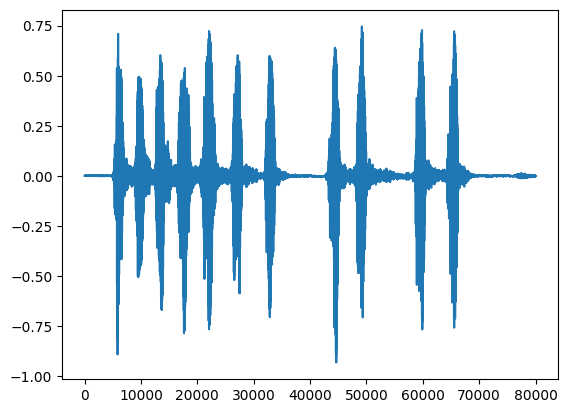

test_pd = filtered_pd.loc[filtered_pd['fold'] == 5]

row = test_pd.sample(1)

filename = row['filename'].item()

print(filename)

waveform = load_wav_16k_mono(filename)

print(f'Waveform values: {waveform}')

_ = plt.plot(waveform)

display.Audio(waveform, rate=16000)

./datasets/ESC-50-master/audio/5-212454-A-0.wav
Waveform values: [-8.8849301e-09 2.6603255e-08 -1.1731625e-08 ... -1.3478296e-03 -1.0509168e-03 -9.1038318e-04]

# Run the model, check the output.

scores, embeddings, spectrogram = yamnet_model(waveform)

class_scores = tf.reduce_mean(scores, axis=0)

top_class = tf.math.argmax(class_scores)

inferred_class = class_names[top_class]

top_score = class_scores[top_class]

print(f'[YAMNet] The main sound is: {inferred_class} ({top_score})')

reloaded_results = reloaded_model(waveform)

your_top_class = tf.math.argmax(reloaded_results)

your_inferred_class = my_classes[your_top_class]

class_probabilities = tf.nn.softmax(reloaded_results, axis=-1)

your_top_score = class_probabilities[your_top_class]

print(f'[Your model] The main sound is: {your_inferred_class} ({your_top_score})')

[YAMNet] The main sound is: Animal (0.9570279121398926)
[Your model] The main sound is: dog (0.9999891519546509)

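Next steps

You have created a model that can classify sounds from dogs or cats. With the same idea and a different dataset you can try, for example, building an acoustic identifier of birds based on their singing.

Share your project with the TensorFlow team on social media!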