在 TensorFlow.org 上查看 在 TensorFlow.org 上查看

|

在 Google Colab 中运行 在 Google Colab 中运行

|

在 GitHub 上查看 在 GitHub 上查看

|

下载笔记本 下载笔记本

|

欢迎来到 TensorFlow 决策森林 (TF-DF) 的提升模型教程。在本教程中,您将学习什么是提升模型,为什么它如此重要,以及如何在 TF-DF 中使用它。

本教程假设您熟悉 TF-DF 的基础知识,特别是安装过程。初学者教程 是开始学习 TF-DF 的绝佳起点。

在本 colab 中,您将

- 了解什么是提升模型。

- 在Hillstrom 电子邮件营销数据集上训练提升随机森林模型。

- 评估此模型的质量。

安装 TensorFlow 决策森林

通过运行以下单元格来安装 TF-DF。

Wurlitzer 可以在 Colabs 中显示详细的训练日志(当在模型构造函数中使用 verbose=2 时)。

pip install tensorflow_decision_forests wurlitzer

导入库

import tensorflow_decision_forests as tfdf

import os

import numpy as np

import pandas as pd

import tensorflow as tf

import math

import matplotlib.pyplot as plt

隐藏的代码单元格限制了 colab 中的输出高度。

# Check the version of TensorFlow Decision Forests

print("Found TensorFlow Decision Forests v" + tfdf.__version__)

Found TensorFlow Decision Forests v1.9.0

什么是提升模型?

提升模型 是一种统计建模技术,用于预测对主题采取行动的增量影响。该行动通常被称为处理,可以应用也可以不应用。

提升模型通常用于目标营销活动,以预测一个人基于其收到的营销曝光而进行购买(或任何其他期望的行动)的可能性增加。

例如,提升模型可以预测电子邮件的效果。效果定义为条件概率 \begin{align} \text{effect}(\text{email}) = &\Pr(\text{outcome}=\text{purchase}\ \vert\ \text{treatment}=\text{with email})\ &- \Pr(\text{outcome}=\text{purchase} \ \vert\ \text{treatment}=\text{no email}), \end{align} 其中 \(\Pr(\text{outcome}=\text{purchase}\ \vert\ ...)\) 是根据是否收到电子邮件而进行购买的概率。

将其与分类模型进行比较:使用分类模型,可以预测购买的概率。但是,即使没有收到电子邮件,具有高概率的客户也可能在商店中花钱。

类似地,可以使用数值提升来预测收到电子邮件时支出增加的数值。相比之下,回归模型只能增加预期支出,这在许多情况下是一个不太有用的指标。

在 TF-DF 中定义提升模型

TF-DF 期望提升数据集以“扁平”格式呈现。客户数据集可能如下所示

| 处理 | 结果 | 特征_1 | 特征_2 |

|---|---|---|---|

| 0 | 1 | 0.1 | 蓝色 |

| 0 | 0 | 0.2 | 蓝色 |

| 1 | 1 | 0.3 | 蓝色 |

| 1 | 1 | 0.4 | 蓝色 |

处理是一个二元变量,指示示例是否已收到处理。在上面的示例中,处理指示客户是否已收到电子邮件。结果(标签)指示示例在收到处理(或未收到处理)后的状态。TF-DF 支持用于分类提升的分类结果和用于数值提升的数值结果。

训练提升模型

在本示例中,我们将使用Hillstrom 电子邮件营销数据集。

此数据集包含 64,000 名在过去 12 个月内最后一次购买的客户。这些客户参与了电子邮件测试

- 1/3 随机选择接收以男士商品为特色的电子邮件活动。

- 1/3 随机选择接收以女士商品为特色的电子邮件活动。

- 1/3 随机选择不接收电子邮件活动。

在电子邮件活动后的两周内,跟踪了结果。任务是判断男士或女士电子邮件活动是否成功。

阅读有关数据集的更多信息 在其文档中。本教程使用由 TensorFlow 数据集 整理的数据集。

# Install the TensorFlow Datasets packagepip install tensorflow-datasets -U --quiet

# Load the dataset

import tensorflow_datasets as tfds

raw_train, raw_test = tfds.load('hillstrom', split=['train[:80%]', 'train[20%:]'])

# Display the first 10 examples of the test fold.

pd.DataFrame(list(raw_test.batch(10).take(1))[0])

2024-04-20 11:22:09.063782: W tensorflow/core/kernels/data/cache_dataset_ops.cc:858] The calling iterator did not fully read the dataset being cached. In order to avoid unexpected truncation of the dataset, the partially cached contents of the dataset will be discarded. This can happen if you have an input pipeline similar to `dataset.cache().take(k).repeat()`. You should use `dataset.take(k).cache().repeat()` instead. 2024-04-20 11:22:09.069098: W tensorflow/core/framework/local_rendezvous.cc:404] Local rendezvous is aborting with status: OUT_OF_RANGE: End of sequence

数据集预处理

由于 TF-DF 目前仅支持二元处理,因此将“男士电子邮件”和“女士电子邮件”活动合并。本教程使用二元变量 conversion 作为结果。这意味着问题是一个分类提升问题。如果我们使用数值变量 spend,问题将是一个数值提升问题。

def prepare_dataset(example):

# Use a binary treatment class.

example['treatment'] = 1 if example['segment'] == b'Mens E-Mail' or example['segment'] == b'Womens E-Mail' else 0

outcome = example['conversion']

# Restrict the dataset to the input features.

input_features = ['channel', 'history', 'mens', 'womens', 'newbie', 'recency', 'zip_code', 'treatment']

example = {feature: example[feature] for feature in input_features}

return example, outcome

train_ds = raw_train.map(prepare_dataset).batch(100)

test_ds = raw_test.map(prepare_dataset).batch(100)

模型训练

最后,像往常一样训练和评估模型。请注意,TF-DF 仅支持用于提升的随机森林模型。

%set_cell_height 300

# Configure the model and its hyper-parameters.

model = tfdf.keras.RandomForestModel(

verbose=2,

task=tfdf.keras.Task.CATEGORICAL_UPLIFT,

uplift_treatment='treatment'

)

# Train the model.

model.fit(train_ds)

<IPython.core.display.Javascript object>

Warning: The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.

WARNING:absl:The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.

Use /tmpfs/tmp/tmppyeh4gae as temporary training directory

Reading training dataset...

Training tensor examples:

Features: {'channel': <tf.Tensor 'data:0' shape=(None,) dtype=string>, 'history': <tf.Tensor 'data_1:0' shape=(None,) dtype=float32>, 'mens': <tf.Tensor 'data_2:0' shape=(None,) dtype=int64>, 'womens': <tf.Tensor 'data_3:0' shape=(None,) dtype=int64>, 'newbie': <tf.Tensor 'data_4:0' shape=(None,) dtype=int64>, 'recency': <tf.Tensor 'data_5:0' shape=(None,) dtype=int64>, 'zip_code': <tf.Tensor 'data_6:0' shape=(None,) dtype=string>, 'treatment': <tf.Tensor 'data_7:0' shape=(None,) dtype=int32>}

Label: Tensor("data_8:0", shape=(None,), dtype=int64)

Weights: None

Normalized tensor features:

{'channel': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data:0' shape=(None,) dtype=string>), 'history': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'data_1:0' shape=(None,) dtype=float32>), 'mens': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast:0' shape=(None,) dtype=float32>), 'womens': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_1:0' shape=(None,) dtype=float32>), 'newbie': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_2:0' shape=(None,) dtype=float32>), 'recency': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_3:0' shape=(None,) dtype=float32>), 'zip_code': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_6:0' shape=(None,) dtype=string>)}

Training dataset read in 0:00:04.974222. Found 51200 examples.

Training model...

Standard output detected as not visible to the user e.g. running in a notebook. Creating a training log redirection. If training gets stuck, try calling tfdf.keras.set_training_logs_redirection(False).

[INFO 24-04-20 11:22:14.2334 UTC kernel.cc:771] Start Yggdrasil model training

[INFO 24-04-20 11:22:14.2335 UTC kernel.cc:772] Collect training examples

[INFO 24-04-20 11:22:14.2335 UTC kernel.cc:785] Dataspec guide:

column_guides {

column_name_pattern: "^__LABEL$"

type: CATEGORICAL

}

default_column_guide {

categorial {

max_vocab_count: 2000

}

discretized_numerical {

maximum_num_bins: 255

}

}

ignore_columns_without_guides: false

detect_numerical_as_discretized_numerical: false

[INFO 24-04-20 11:22:14.2339 UTC kernel.cc:391] Number of batches: 512

[INFO 24-04-20 11:22:14.2339 UTC kernel.cc:392] Number of examples: 51200

[INFO 24-04-20 11:22:14.2463 UTC kernel.cc:792] Training dataset:

Number of records: 51200

Number of columns: 9

Number of columns by type:

NUMERICAL: 5 (55.5556%)

CATEGORICAL: 4 (44.4444%)

Columns:

NUMERICAL: 5 (55.5556%)

2: "history" NUMERICAL mean:241.833 min:29.99 max:3345.93 sd:255.292

3: "mens" NUMERICAL mean:0.550391 min:0 max:1 sd:0.497454

4: "newbie" NUMERICAL mean:0.503086 min:0 max:1 sd:0.49999

5: "recency" NUMERICAL mean:5.75514 min:1 max:12 sd:3.50281

7: "womens" NUMERICAL mean:0.549687 min:0 max:1 sd:0.497525

CATEGORICAL: 4 (44.4444%)

0: "__LABEL" CATEGORICAL integerized vocab-size:3 no-ood-item

1: "channel" CATEGORICAL has-dict vocab-size:4 zero-ood-items most-frequent:"Web" 22576 (44.0938%)

6: "treatment" CATEGORICAL integerized vocab-size:3 no-ood-item

8: "zip_code" CATEGORICAL has-dict vocab-size:4 zero-ood-items most-frequent:"Surburban" 22966 (44.8555%)

Terminology:

nas: Number of non-available (i.e. missing) values.

ood: Out of dictionary.

manually-defined: Attribute whose type is manually defined by the user, i.e., the type was not automatically inferred.

tokenized: The attribute value is obtained through tokenization.

has-dict: The attribute is attached to a string dictionary e.g. a categorical attribute stored as a string.

vocab-size: Number of unique values.

[INFO 24-04-20 11:22:14.2464 UTC kernel.cc:808] Configure learner

[INFO 24-04-20 11:22:14.2466 UTC kernel.cc:822] Training config:

learner: "RANDOM_FOREST"

features: "^channel$"

features: "^history$"

features: "^mens$"

features: "^newbie$"

features: "^recency$"

features: "^womens$"

features: "^zip_code$"

label: "^__LABEL$"

task: CATEGORICAL_UPLIFT

random_seed: 123456

uplift_treatment: "treatment"

metadata {

framework: "TF Keras"

}

pure_serving_model: false

[yggdrasil_decision_forests.model.random_forest.proto.random_forest_config] {

num_trees: 300

decision_tree {

max_depth: 16

min_examples: 5

in_split_min_examples_check: true

keep_non_leaf_label_distribution: true

num_candidate_attributes: 0

missing_value_policy: GLOBAL_IMPUTATION

allow_na_conditions: false

categorical_set_greedy_forward {

sampling: 0.1

max_num_items: -1

min_item_frequency: 1

}

growing_strategy_local {

}

categorical {

cart {

}

}

axis_aligned_split {

}

internal {

sorting_strategy: PRESORTED

}

uplift {

min_examples_in_treatment: 5

split_score: KULLBACK_LEIBLER

}

}

winner_take_all_inference: true

compute_oob_performances: true

compute_oob_variable_importances: false

num_oob_variable_importances_permutations: 1

bootstrap_training_dataset: true

bootstrap_size_ratio: 1

adapt_bootstrap_size_ratio_for_maximum_training_duration: false

sampling_with_replacement: true

}

[INFO 24-04-20 11:22:14.2469 UTC kernel.cc:825] Deployment config:

cache_path: "/tmpfs/tmp/tmppyeh4gae/working_cache"

num_threads: 32

try_resume_training: true

[INFO 24-04-20 11:22:14.2472 UTC kernel.cc:887] Train model

[INFO 24-04-20 11:22:14.2473 UTC random_forest.cc:416] Training random forest on 51200 example(s) and 7 feature(s).

[WARNING 24-04-20 11:22:14.3731 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.3741 UTC random_forest.cc:802] Training of tree 1/300 (tree index:2) done qini:0.000172044 auuc:0.0025137

[WARNING 24-04-20 11:22:14.4012 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.4027 UTC random_forest.cc:802] Training of tree 15/300 (tree index:31) done qini:1.41341e-05 auuc:0.0023575

[WARNING 24-04-20 11:22:14.5302 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.5327 UTC random_forest.cc:802] Training of tree 25/300 (tree index:23) done qini:-2.19346e-05 auuc:0.00235455

[WARNING 24-04-20 11:22:14.6034 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.6058 UTC random_forest.cc:802] Training of tree 35/300 (tree index:33) done qini:0.00013211 auuc:0.0025086

[WARNING 24-04-20 11:22:14.6887 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.6910 UTC random_forest.cc:802] Training of tree 45/300 (tree index:45) done qini:-2.28572e-05 auuc:0.00235363

[WARNING 24-04-20 11:22:14.7656 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.7680 UTC random_forest.cc:802] Training of tree 55/300 (tree index:55) done qini:-8.67727e-05 auuc:0.00228972

[WARNING 24-04-20 11:22:14.8354 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.8379 UTC random_forest.cc:802] Training of tree 65/300 (tree index:56) done qini:-0.000112323 auuc:0.00226417

[WARNING 24-04-20 11:22:14.9052 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.9077 UTC random_forest.cc:802] Training of tree 75/300 (tree index:74) done qini:-0.000109942 auuc:0.00226655

[WARNING 24-04-20 11:22:14.9680 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:14.9704 UTC random_forest.cc:802] Training of tree 101/300 (tree index:101) done qini:-0.000112409 auuc:0.00226408

[WARNING 24-04-20 11:22:15.1148 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.1196 UTC random_forest.cc:802] Training of tree 121/300 (tree index:118) done qini:-0.000299795 auuc:0.00207669

[WARNING 24-04-20 11:22:15.2280 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.2305 UTC random_forest.cc:802] Training of tree 131/300 (tree index:138) done qini:-0.000153133 auuc:0.00222336

[WARNING 24-04-20 11:22:15.3108 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.3155 UTC random_forest.cc:802] Training of tree 141/300 (tree index:139) done qini:-0.000173194 auuc:0.0022033

[WARNING 24-04-20 11:22:15.3853 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.3877 UTC random_forest.cc:802] Training of tree 168/300 (tree index:162) done qini:-0.000130945 auuc:0.00224554

[WARNING 24-04-20 11:22:15.5471 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.5519 UTC random_forest.cc:802] Training of tree 178/300 (tree index:178) done qini:-0.000145457 auuc:0.00223103

[WARNING 24-04-20 11:22:15.6367 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.6414 UTC random_forest.cc:802] Training of tree 188/300 (tree index:189) done qini:-0.000124566 auuc:0.00225192

[WARNING 24-04-20 11:22:15.6876 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.6901 UTC random_forest.cc:802] Training of tree 217/300 (tree index:213) done qini:-0.000161956 auuc:0.00221453

[WARNING 24-04-20 11:22:15.8731 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.8795 UTC random_forest.cc:802] Training of tree 227/300 (tree index:229) done qini:-0.000133605 auuc:0.00224288

[WARNING 24-04-20 11:22:15.9403 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:15.9428 UTC random_forest.cc:802] Training of tree 237/300 (tree index:239) done qini:-0.000101549 auuc:0.00227494

[WARNING 24-04-20 11:22:16.0044 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.0068 UTC random_forest.cc:802] Training of tree 247/300 (tree index:253) done qini:-0.000141334 auuc:0.00223516

[WARNING 24-04-20 11:22:16.0749 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.0773 UTC random_forest.cc:802] Training of tree 257/300 (tree index:257) done qini:-0.000135416 auuc:0.00224107

[WARNING 24-04-20 11:22:16.1446 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.1471 UTC random_forest.cc:802] Training of tree 267/300 (tree index:261) done qini:-0.000131112 auuc:0.00224538

[WARNING 24-04-20 11:22:16.2109 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.2132 UTC random_forest.cc:802] Training of tree 277/300 (tree index:275) done qini:-0.000149751 auuc:0.00222674

[WARNING 24-04-20 11:22:16.2724 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.2746 UTC random_forest.cc:802] Training of tree 287/300 (tree index:283) done qini:-0.000168736 auuc:0.00220775

[WARNING 24-04-20 11:22:16.3282 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.3306 UTC random_forest.cc:802] Training of tree 297/300 (tree index:299) done qini:-0.000181665 auuc:0.00219482

[WARNING 24-04-20 11:22:16.3623 UTC random_forest.cc:1105] Internal error: Non empty oob evaluation

[INFO 24-04-20 11:22:16.3646 UTC random_forest.cc:802] Training of tree 300/300 (tree index:298) done qini:-0.000173258 auuc:0.00220323

[INFO 24-04-20 11:22:16.3680 UTC random_forest.cc:882] Final OOB metrics: qini:-0.000173258 auuc:0.00220323

[INFO 24-04-20 11:22:16.3843 UTC kernel.cc:919] Export model in log directory: /tmpfs/tmp/tmppyeh4gae with prefix 568256236db544eb

[INFO 24-04-20 11:22:16.4274 UTC kernel.cc:937] Save model in resources

[INFO 24-04-20 11:22:16.4309 UTC abstract_model.cc:881] Model self evaluation:

Number of predictions (without weights): 51200

Number of predictions (with weights): 51200

Task: CATEGORICAL_UPLIFT

Label: __LABEL

Number of treatments: 2

AUUC: 0.00220323

Qini: -0.000173258

[INFO 24-04-20 11:22:16.4580 UTC kernel.cc:1233] Loading model from path /tmpfs/tmp/tmppyeh4gae/model/ with prefix 568256236db544eb

[INFO 24-04-20 11:22:16.6557 UTC decision_forest.cc:734] Model loaded with 300 root(s), 60190 node(s), and 7 input feature(s).

[INFO 24-04-20 11:22:16.6557 UTC abstract_model.cc:1344] Engine "RandomForestGeneric" built

[INFO 24-04-20 11:22:16.6557 UTC kernel.cc:1061] Use fast generic engine

Model trained in 0:00:02.442514

Compiling model...

Model compiled.

<tf_keras.src.callbacks.History at 0x7f5dd40eb7c0>

评估提升模型。

提升模型的指标

评估提升模型最重要的两个指标是 **AUUC**(提升曲线下面积)和 **Qini**(Qini 曲线下面积)。这类似于在分类问题中使用 AUC 和准确率。对于这两个指标,数值越大越好。

AUUC 和 Qini **不是** 归一化指标。这意味着指标的最佳可能值会因数据集而异。这与例如始终在 0 到 1 之间变化的 AUC 指标不同。

下面是 AUUC 的正式定义。有关这些指标的更多信息,请参阅 Guelman 和 Betlei 等人。

模型自我评估

TF-DF 随机森林模型在训练数据集的包外示例上执行自我评估。对于提升模型,它们会公开 AUUC 和 Qini 指标。您可以通过检查器直接检索训练数据集上的这两个指标。

稍后,我们将“手动”重新计算测试数据集上的 AUUC 指标。请注意,这两个指标预计不会完全相等(训练中的包外与测试),因为 AUUC 不是归一化指标。

# The self-evaluation is available through the model inspector

insp = model.make_inspector()

insp.evaluation()

Evaluation(num_examples=51200, accuracy=None, loss=None, rmse=None, ndcg=None, aucs=None, auuc=0.0022032308892709586, qini=-0.00017325819500263418)

手动计算 AUUC

在本节中,我们将手动计算 AUUC 并绘制提升曲线。

接下来的几段将更详细地解释 AUUC 指标,可以跳过。

计算 AUUC

假设您有一个带标签的数据集,其中包含 \(|T|\) 个带有处理的示例和 \(|C|\) 个没有处理的示例,称为控制示例。对于每个示例,提升模型 \(f\) 会生成一个条件概率,即对该示例进行处理将产生积极结果。

假设决策者需要决定使用提升模型 \(f\) 向哪些客户发送电子邮件。该模型会生成一个(条件)概率,即电子邮件将导致转化。因此,决策者可能会选择要发送的电子邮件数量 \(k\),并将这 \(k\) 封电子邮件发送给概率最高的客户。

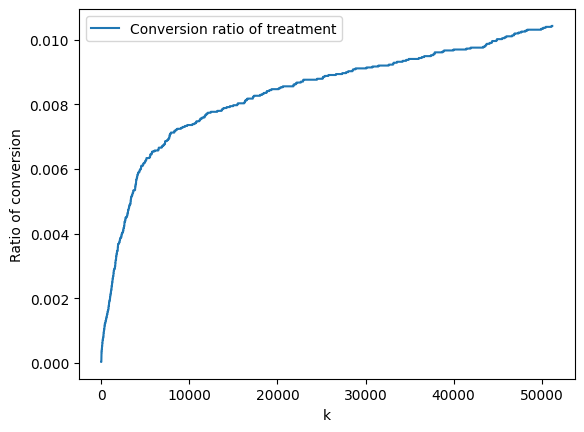

使用带标签的测试数据集,可以研究 \(k\) 对活动成功的影响。首先,我们对收到电子邮件并转化的客户与收到电子邮件的客户总数的比率 \(\frac{|C \cap T|}{|T|}\) 感兴趣。这里 \(C\) 是收到电子邮件并转化的客户集合,\(T\) 是收到电子邮件的客户总数。我们将此比率相对于 \(k\) 绘制。

理想情况下,我们希望这条曲线急剧上升。这意味着该模型优先将电子邮件发送给那些在收到电子邮件时会产生转化的客户。

# Compute all predictions on the test dataset

predictions = model.predict(test_ds).flatten()

# Extract outcomes and treatments

outcomes = np.concatenate([outcome.numpy() for _, outcome in test_ds])

treatment = np.concatenate([example['treatment'].numpy() for example,_ in test_ds])

control = 1 - treatment

num_treatments = np.sum(treatment)

# Clients without treatment are called 'control' group

num_control = np.sum(control)

num_examples = len(predictions)

# Sort labels and treatments according to predictions in descending order

prediction_order = predictions.argsort()[::-1]

outcomes_sorted = outcomes[prediction_order]

treatment_sorted = treatment[prediction_order]

control_sorted = control[prediction_order]

ratio_treatment = np.cumsum(np.multiply(outcomes_sorted, treatment_sorted), axis=0)/num_treatments

fig, ax = plt.subplots()

ax.plot(ratio_treatment, label='Conversion ratio of treatment')

ax.set_xlabel('k')

ax.set_ylabel('Ratio of conversion')

ax.legend()

512/512 [==============================] - 3s 5ms/step 2024-04-20 11:22:25.165808: W tensorflow/core/framework/local_rendezvous.cc:404] Local rendezvous is aborting with status: OUT_OF_RANGE: End of sequence 2024-04-20 11:22:25.975008: W tensorflow/core/framework/local_rendezvous.cc:404] Local rendezvous is aborting with status: OUT_OF_RANGE: End of sequence <matplotlib.legend.Legend at 0x7f5cbc41ac70>

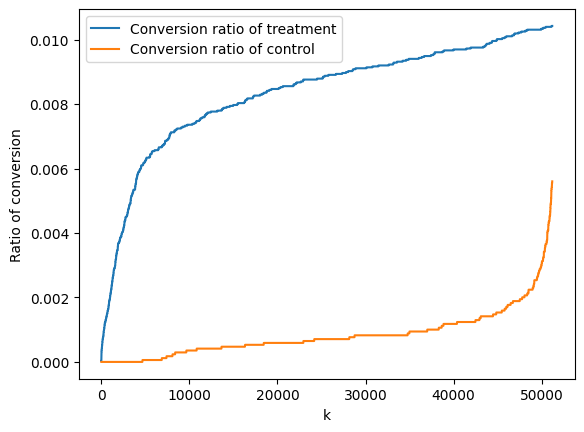

类似地,我们还可以计算并绘制那些没有收到电子邮件的客户(称为控制组)的转化率。理想情况下,这条曲线最初是平坦的:这意味着该模型不会优先将电子邮件发送给那些即使**没有**收到电子邮件也会产生转化的客户。

ratio_control = np.cumsum(np.multiply(outcomes_sorted, control_sorted), axis=0)/num_control

ax.plot(ratio_control, label='Conversion ratio of control')

ax.legend()

fig

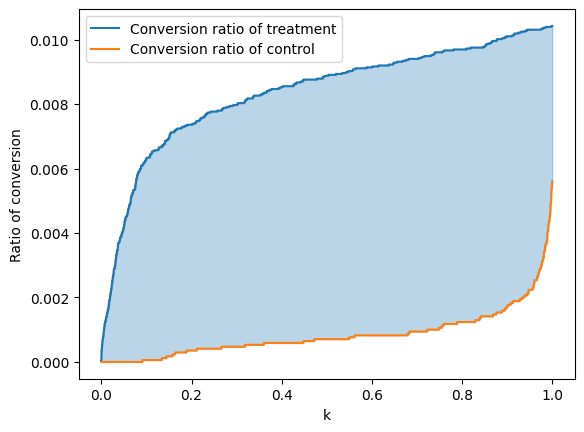

AUUC 指标测量这两条曲线之间的面积,将 y 轴归一化到 0 到 1 之间。

x = np.linspace(0, 1, num_examples)

plt.plot(x,ratio_treatment, label='Conversion ratio of treatment')

plt.plot(x,ratio_control, label='Conversion ratio of control')

plt.fill_between(x, ratio_treatment, ratio_control, where=(ratio_treatment > ratio_control), color='C0', alpha=0.3)

plt.fill_between(x, ratio_treatment, ratio_control, where=(ratio_treatment < ratio_control), color='C1', alpha=0.3)

plt.xlabel('k')

plt.ylabel('Ratio of conversion')

plt.legend()

# Approximate the integral of the difference between the two curves.

auuc = np.trapz(ratio_treatment-ratio_control, dx=1/num_examples)

print(f'The AUUC on the test dataset is {auuc}')

The AUUC on the test dataset is 0.007513928513572819