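Introduction

Welcome to the model composition tutorial for TensorFlow Decision Forests (TF-DF). This notebook shows you how to compose multiple decision forest and neural network models together using a common preprocessing layer and the Keras functional API.

You might want to compose models together to improve predictive performance (ensembling), to get the best of different modeling technologies (heterogeneous model ensembling), to train different parts of the model on different datasets (e.g. pre-training), or to create a stacked model (e.g. a model that operates on the predictions of another model).

This tutorial covers an advanced use case of model composition using the functional API. Simpler model composition scenarios are shown in the "feature preprocessing" and "using a pretrained text embedding" sections of the related TF-DF tutorials.

Here is the structure of the model you'll build:

Your composed model has three stages:

- The first stage is a preprocessing layer composed of a neural network and common to all the models in the next stage. In practice, such a preprocessing layer could either be a pre-trained embedding to fine-tune, or a randomly initialized neural network.

- The second stage is an ensemble of two decision forest and two neural network models.

- The last stage averages the predictions of the models in the second stage. It does not contain any learnable weights.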
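As a rough illustration of the last stage, the averaging can be sketched in plain Python. The probabilities below are made up, and `average_predictions` is a hypothetical helper introduced only for this sketch; the tutorial itself performs this step with `tf.stack` and `tf.reduce_mean`.

```python
def average_predictions(per_model_predictions):
    """Average predictions example by example across models.

    per_model_predictions: one list of probabilities per model,
    all lists having the same length.
    """
    num_models = len(per_model_predictions)
    return [sum(example) / num_models for example in zip(*per_model_predictions)]


# Made-up probabilities from four models for three examples.
predictions = [
    [0.9, 0.2, 0.6],  # model 1 (NN)
    [0.7, 0.4, 0.4],  # model 2 (NN)
    [1.0, 0.0, 0.5],  # model 3 (DF)
    [0.8, 0.2, 0.5],  # model 4 (DF)
]
ensemble = average_predictions(predictions)
print(ensemble)
```

Because this stage only computes a mean, it has nothing to train, which matches the statement that it contains no learnable weights.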

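The neural networks are trained using the backpropagation algorithm and gradient descent. This algorithm has two important properties: (1) a neural network layer can be trained if it receives a loss gradient (more precisely, the gradient of the loss with respect to the layer's output), and (2) the algorithm "transmits" the loss gradient from the layer's output to the layer's input (this is the "chain rule"). For these two reasons, backpropagation can train together multiple neural network layers stacked on top of each other.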
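The two properties can be traced by hand on a toy example. The sketch below assumes a hypothetical two-layer network with a single weight per layer and a squared-error loss; none of these values come from the tutorial's model.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def loss_fn(w1, w2, x, y):
    """Squared error of a toy network: sigmoid(w2 * (w1 * x))."""
    return (sigmoid(w2 * (w1 * x)) - y) ** 2


# Hypothetical weights, input and target.
w1, w2 = 0.5, -1.5
x, y = 2.0, 1.0

# Forward pass.
h = w1 * x           # output of layer 1
p = sigmoid(w2 * h)  # output of layer 2

# Backward pass.
grad_p = 2.0 * (p - y)           # gradient of the loss w.r.t. layer 2's output
grad_z = grad_p * p * (1.0 - p)  # through the sigmoid
grad_w2 = grad_z * h             # property (1): layer 2 can be trained
grad_h = grad_z * w2             # property (2): gradient passed to layer 2's input
grad_w1 = grad_h * x             # so layer 1 can be trained as well

# Check against a central finite difference.
eps = 1e-6
numeric_w1 = (loss_fn(w1 + eps, w2, x, y) - loss_fn(w1 - eps, w2, x, y)) / (2 * eps)
print(grad_w1, numeric_w1)
```

The value `grad_h` is what layer 2 hands down to layer 1; without it, layer 1 would have no training signal.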

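In this example, the decision forests are trained with the Random Forest (RF) algorithm. Unlike backpropagation, the training of RF does not "transmit" the loss gradient from its output to its input. For this reason, the classical RF algorithm cannot be used to train or fine-tune a neural network underneath. In other words, the "decision forest" stage cannot be used to train the "learnable NN pre-processing block". The model is therefore trained in two steps:

- Train the preprocessing and neural network stages.

- Train the decision forest stage.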
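One intuition for why the decision forest stage cannot pass a training signal downwards: the output of a decision tree is piecewise constant in its input, so its derivative with respect to the input is zero almost everywhere. The sketch below uses a hypothetical one-split `stump` as a stand-in for a trained tree; it is not TF-DF code.

```python
def stump(x, threshold=0.3):
    """Hypothetical one-split tree: constant on each side of the threshold."""
    return 0.8 if x >= threshold else 0.1


def linear(x):
    """A differentiable layer, for comparison."""
    return 2.0 * x


def finite_difference(f, x, eps=1e-4):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


stump_slope = finite_difference(stump, 0.5)
linear_slope = finite_difference(linear, 0.5)
print(stump_slope)   # no signal reaches the layer underneath
print(linear_slope)  # close to 2.0
```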

Install TensorFlow Decision Forests

Install TF-DF by running the following cell.

pip install tensorflow_decision_forests -U --quiet

Wurlitzer is needed to display the detailed training logs in Colabs (when using verbose=2 in the model constructor).

pip install wurlitzer -U --quiet

Import libraries

import os
# Keep using Keras 2
os.environ['TF_USE_LEGACY_KERAS'] = '1'

import tensorflow_decision_forests as tfdf

import numpy as np
import pandas as pd
import tensorflow as tf
import tf_keras
import math
import matplotlib.pyplot as plt

Dataset

In this tutorial, you will use a simple synthetic dataset to make the final model easier to interpret.

def make_dataset(num_examples, num_features, seed=1234):
  np.random.seed(seed)
  features = np.random.uniform(-1, 1, size=(num_examples, num_features))
  noise = np.random.uniform(size=(num_examples))

  left_side = np.sqrt(
      np.sum(np.multiply(np.square(features[:, 0:2]), [1, 2]), axis=1))
  right_side = features[:, 2] * 0.7 + np.sin(
      features[:, 3] * 10) * 0.5 + noise * 0.0 + 0.5

  labels = left_side <= right_side
  return features, labels.astype(int)

Generate some examples:

make_dataset(num_examples=5, num_features=4)

(array([[-0.6169611 , 0.24421754, -0.12454452, 0.57071717],

[ 0.55995162, -0.45481479, -0.44707149, 0.60374436],

[ 0.91627871, 0.75186527, -0.28436546, 0.00199025],

[ 0.36692587, 0.42540405, -0.25949849, 0.12239237],

[ 0.00616633, -0.9724631 , 0.54565324, 0.76528238]]),

array([0, 0, 0, 1, 0]))
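To make the labeling rule concrete, here is a minimal pure-Python sketch that re-applies the rule from `make_dataset` to two rows of the output above. `label_for` is a hypothetical helper written for this check; the noise term is left out because `make_dataset` multiplies it by 0.0.

```python
import math


def label_for(features):
    """Apply the make_dataset labeling rule to a single example."""
    left_side = math.sqrt(features[0] ** 2 + 2 * features[1] ** 2)
    right_side = features[2] * 0.7 + math.sin(features[3] * 10) * 0.5 + 0.5
    return int(left_side <= right_side)


# First and fourth rows of the generated examples.
first_row = [-0.6169611, 0.24421754, -0.12454452, 0.57071717]
fourth_row = [0.36692587, 0.42540405, -0.25949849, 0.12239237]

print(label_for(first_row), label_for(fourth_row))  # prints: 0 1
```

Only the first four features enter the rule, so any additional features act as distractors for the models.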

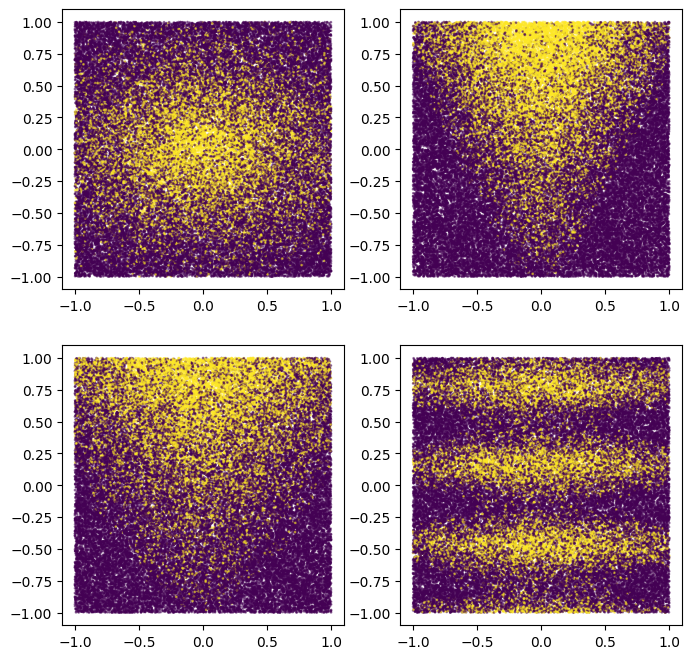

You can also plot them to get an idea of the synthetic pattern:

plot_features, plot_label = make_dataset(num_examples=50000, num_features=4)

plt.rcParams["figure.figsize"] = [8, 8]
common_args = dict(c=plot_label, s=1.0, alpha=0.5)

plt.subplot(2, 2, 1)
plt.scatter(plot_features[:, 0], plot_features[:, 1], **common_args)

plt.subplot(2, 2, 2)
plt.scatter(plot_features[:, 1], plot_features[:, 2], **common_args)

plt.subplot(2, 2, 3)
plt.scatter(plot_features[:, 0], plot_features[:, 2], **common_args)

plt.subplot(2, 2, 4)
plt.scatter(plot_features[:, 0], plot_features[:, 3], **common_args)

<matplotlib.collections.PathCollection at 0x7f4a523ddca0>

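Note that this pattern is smooth and not axis-aligned. This favors the neural network models, because round and non-axis-aligned decision boundaries are easier for a neural network to learn than for a decision tree.

On the other hand, we will train the models on a small dataset of 2500 examples. This favors the decision forest models, because decision forests make more efficient use of all the information available in the examples (decision forests are "sample efficient").

Our ensemble of neural networks and decision forests will use the best of both worlds.

Let's create a train and test tf.data.Dataset: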

def make_tf_dataset(batch_size=64, **args):
  features, labels = make_dataset(**args)
  return tf.data.Dataset.from_tensor_slices(
      (features, labels)).batch(batch_size)


num_features = 10

train_dataset = make_tf_dataset(
    num_examples=2500, num_features=num_features, batch_size=100, seed=1234)
test_dataset = make_tf_dataset(
    num_examples=10000, num_features=num_features, batch_size=100, seed=5678)
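As a quick sanity check on these settings, the number of batches per epoch can be worked out by hand. The sketch assumes the default batching behaviour, where a final partial batch is kept rather than dropped; `num_batches` is a hypothetical helper.

```python
import math


def num_batches(num_examples, batch_size):
    """Number of batches when a final partial batch is kept."""
    return math.ceil(num_examples / batch_size)


train_batches = num_batches(2500, 100)
test_batches = num_batches(10000, 100)
print(train_batches, test_batches)  # prints: 25 100
```

These counts correspond to the `25/25` and `100/100` progress indicators in the training and evaluation logs.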

Model structure

Define the model structure as follows:

# Input features.
raw_features = tf_keras.layers.Input(shape=(num_features,))

# Stage 1
# =======

# Common learnable pre-processing
preprocessor = tf_keras.layers.Dense(10, activation=tf.nn.relu6)
preprocess_features = preprocessor(raw_features)

# Stage 2
# =======

# Model #1: NN
m1_z1 = tf_keras.layers.Dense(5, activation=tf.nn.relu6)(preprocess_features)
m1_pred = tf_keras.layers.Dense(1, activation=tf.nn.sigmoid)(m1_z1)

# Model #2: NN
m2_z1 = tf_keras.layers.Dense(5, activation=tf.nn.relu6)(preprocess_features)
m2_pred = tf_keras.layers.Dense(1, activation=tf.nn.sigmoid)(m2_z1)

# Model #3: DF
model_3 = tfdf.keras.RandomForestModel(num_trees=1000, random_seed=1234)
m3_pred = model_3(preprocess_features)

# Model #4: DF
model_4 = tfdf.keras.RandomForestModel(
    num_trees=1000,
    #split_axis="SPARSE_OBLIQUE", # Uncomment this line to increase the quality of this model
    random_seed=4567)
m4_pred = model_4(preprocess_features)

# Since TF-DF uses deterministic learning algorithms, you should set the model's
# training seed to different values otherwise both
# `tfdf.keras.RandomForestModel` will be exactly the same.

# Stage 3
# =======

mean_nn_only = tf.reduce_mean(tf.stack([m1_pred, m2_pred], axis=0), axis=0)
mean_nn_and_df = tf.reduce_mean(
    tf.stack([m1_pred, m2_pred, m3_pred, m4_pred], axis=0), axis=0)

# Keras Models
# ============

ensemble_nn_only = tf_keras.models.Model(raw_features, mean_nn_only)
ensemble_nn_and_df = tf_keras.models.Model(raw_features, mean_nn_and_df)

Warning: The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.

Use /tmpfs/tmp/tmp5rudwfpk as temporary training directory

Warning: The model was called directly (i.e. using `model(data)` instead of using `model.predict(data)`) before being trained. The model will only return zeros until trained. The output shape might change after training Tensor("inputs:0", shape=(None, 10), dtype=float32)

Warning: The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.

Use /tmpfs/tmp/tmp7z3k5oog as temporary training directory

Warning: The model was called directly (i.e. using `model(data)` instead of using `model.predict(data)`) before being trained. The model will only return zeros until trained. The output shape might change after training Tensor("inputs:0", shape=(None, 10), dtype=float32)

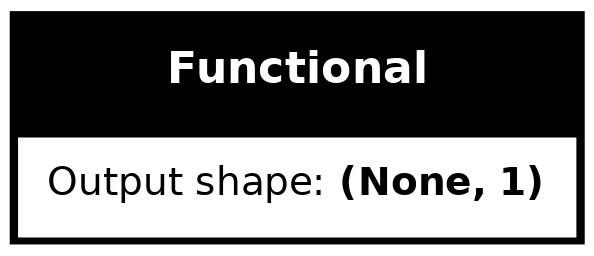

Before you train the model, you can plot it to check that it is similar to the initial diagram.

from tf_keras.utils import plot_model

plot_model(ensemble_nn_and_df, to_file="/tmp/model.png", show_shapes=True)

Model training

First, train the preprocessing layer and the two neural network models using the backpropagation algorithm.

%%time
ensemble_nn_only.compile(
    optimizer=tf_keras.optimizers.Adam(),
    loss=tf_keras.losses.BinaryCrossentropy(),
    metrics=["accuracy"])

ensemble_nn_only.fit(train_dataset, epochs=20, validation_data=test_dataset)

Epoch 1/20
WARNING: All log messages before absl::InitializeLog() is called are written to STDERR
I0000 00:00:1713611529.601020 11701 service.cc:145] XLA service 0x7f48904d48d0 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:
I0000 00:00:1713611529.601060 11701 service.cc:153] StreamExecutor device (0): Tesla T4, Compute Capability 7.5
I0000 00:00:1713611529.601064 11701 service.cc:153] StreamExecutor device (1): Tesla T4, Compute Capability 7.5
I0000 00:00:1713611529.601067 11701 service.cc:153] StreamExecutor device (2): Tesla T4, Compute Capability 7.5
I0000 00:00:1713611529.601069 11701 service.cc:153] StreamExecutor device (3): Tesla T4, Compute Capability 7.5
I0000 00:00:1713611529.771938 11701 device_compiler.h:188] Compiled cluster using XLA! This line is logged at most once for the lifetime of the process.
25/25 [==============================] - 15s 33ms/step - loss: 0.5756 - accuracy: 0.7500 - val_loss: 0.5714 - val_accuracy: 0.7392
Epoch 2/20
25/25 [==============================] - 0s 11ms/step - loss: 0.5524 - accuracy: 0.7500 - val_loss: 0.5543 - val_accuracy: 0.7392
Epoch 3/20
25/25 [==============================] - 0s 10ms/step - loss: 0.5351 - accuracy: 0.7500 - val_loss: 0.5406 - val_accuracy: 0.7392
Epoch 4/20
25/25 [==============================] - 0s 10ms/step - loss: 0.5209 - accuracy: 0.7500 - val_loss: 0.5287 - val_accuracy: 0.7392
Epoch 5/20
25/25 [==============================] - 0s 10ms/step - loss: 0.5083 - accuracy: 0.7500 - val_loss: 0.5176 - val_accuracy: 0.7392
Epoch 6/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4967 - accuracy: 0.7500 - val_loss: 0.5072 - val_accuracy: 0.7392
Epoch 7/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4856 - accuracy: 0.7512 - val_loss: 0.4973 - val_accuracy: 0.7397
Epoch 8/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4753 - accuracy: 0.7524 - val_loss: 0.4882 - val_accuracy: 0.7421
Epoch 9/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4658 - accuracy: 0.7572 - val_loss: 0.4799 - val_accuracy: 0.7454
Epoch 10/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4572 - accuracy: 0.7600 - val_loss: 0.4726 - val_accuracy: 0.7500
Epoch 11/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4498 - accuracy: 0.7668 - val_loss: 0.4664 - val_accuracy: 0.7575
Epoch 12/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4435 - accuracy: 0.7748 - val_loss: 0.4612 - val_accuracy: 0.7637
Epoch 13/20
25/25 [==============================] - 0s 11ms/step - loss: 0.4382 - accuracy: 0.7780 - val_loss: 0.4569 - val_accuracy: 0.7678
Epoch 14/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4339 - accuracy: 0.7832 - val_loss: 0.4534 - val_accuracy: 0.7702
Epoch 15/20
25/25 [==============================] - 0s 11ms/step - loss: 0.4302 - accuracy: 0.7888 - val_loss: 0.4504 - val_accuracy: 0.7731
Epoch 16/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4269 - accuracy: 0.7920 - val_loss: 0.4477 - val_accuracy: 0.7768
Epoch 17/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4239 - accuracy: 0.7924 - val_loss: 0.4452 - val_accuracy: 0.7786
Epoch 18/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4211 - accuracy: 0.7936 - val_loss: 0.4429 - val_accuracy: 0.7800
Epoch 19/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4184 - accuracy: 0.7976 - val_loss: 0.4406 - val_accuracy: 0.7819
Epoch 20/20
25/25 [==============================] - 0s 10ms/step - loss: 0.4158 - accuracy: 0.7992 - val_loss: 0.4382 - val_accuracy: 0.7836
CPU times: user 20.7 s, sys: 1.36 s, total: 22 s
Wall time: 19.6 s
<tf_keras.src.callbacks.History at 0x7f4a4c0fa3a0>

Let's evaluate the preprocessing layer together with the two neural networks only:

evaluation_nn_only = ensemble_nn_only.evaluate(test_dataset, return_dict=True)
print("Accuracy (NN #1 and #2 only): ", evaluation_nn_only["accuracy"])
print("Loss (NN #1 and #2 only): ", evaluation_nn_only["loss"])

100/100 [==============================] - 0s 2ms/step - loss: 0.4382 - accuracy: 0.7836
Accuracy (NN #1 and #2 only):  0.7835999727249146
Loss (NN #1 and #2 only):  0.438231498003006

Let's train the two decision forest components (one after the other).

%%time
train_dataset_with_preprocessing = train_dataset.map(lambda x,y: (preprocessor(x), y))
test_dataset_with_preprocessing = test_dataset.map(lambda x,y: (preprocessor(x), y))

model_3.fit(train_dataset_with_preprocessing)
model_4.fit(train_dataset_with_preprocessing)

WARNING:tensorflow:AutoGraph could not transform <function <lambda> at 0x7f4b7b5d91f0> and will run it as-is. Cause: could not parse the source code of <function <lambda> at 0x7f4b7b5d91f0>: no matching AST found among candidates: To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert
WARNING:tensorflow:AutoGraph could not transform <function <lambda> at 0x7f4b7ba3eee0> and will run it as-is. Cause: could not parse the source code of <function <lambda> at 0x7f4b7ba3eee0>: no matching AST found among candidates: To silence this warning, decorate the function with @tf.autograph.experimental.do_not_convert
Reading training dataset...
Training dataset read in 0:00:03.699343. Found 2500 examples.
Training model...
[INFO 24-04-20 11:12:20.9165 UTC kernel.cc:1233] Loading model from path /tmpfs/tmp/tmp5rudwfpk/model/ with prefix 3da3a8649d9e4cb4
Model trained in 0:00:02.061496
Compiling model...
[INFO 24-04-20 11:12:22.0091 UTC decision_forest.cc:734] Model loaded with 1000 root(s), 355690 node(s), and 10 input feature(s).
[INFO 24-04-20 11:12:22.0091 UTC abstract_model.cc:1344] Engine "RandomForestOptPred" built
[INFO 24-04-20 11:12:22.0091 UTC kernel.cc:1061] Use fast generic engine
Model compiled.
Reading training dataset...
Training dataset read in 0:00:00.231041. Found 2500 examples.
Training model...
[INFO 24-04-20 11:12:23.7301 UTC kernel.cc:1233] Loading model from path /tmpfs/tmp/tmp7z3k5oog/model/ with prefix 8b0da5e131c043b2
Model trained in 0:00:01.916593
Compiling model...
[INFO 24-04-20 11:12:24.7575 UTC decision_forest.cc:734] Model loaded with 1000 root(s), 357028 node(s), and 10 input feature(s).
[INFO 24-04-20 11:12:24.7576 UTC kernel.cc:1061] Use fast generic engine
Model compiled.
CPU times: user 22.9 s, sys: 1.73 s, total: 24.6 s
Wall time: 8.74 s
<tf_keras.src.callbacks.History at 0x7f4b7b6fcee0>

Let's evaluate the decision forests individually.

model_3.compile(["accuracy"])
model_4.compile(["accuracy"])

evaluation_df3_only = model_3.evaluate(
    test_dataset_with_preprocessing, return_dict=True)
evaluation_df4_only = model_4.evaluate(
    test_dataset_with_preprocessing, return_dict=True)

print("Accuracy (DF #3 only): ", evaluation_df3_only["accuracy"])
print("Accuracy (DF #4 only): ", evaluation_df4_only["accuracy"])

100/100 [==============================] - 2s 10ms/step - loss: 0.0000e+00 - accuracy: 0.7990
100/100 [==============================] - 1s 10ms/step - loss: 0.0000e+00 - accuracy: 0.7989
Accuracy (DF #3 only):  0.7990000247955322
Accuracy (DF #4 only):  0.7989000082015991

Let's evaluate the entire model composition:

ensemble_nn_and_df.compile(
    loss=tf_keras.losses.BinaryCrossentropy(), metrics=["accuracy"])

evaluation_nn_and_df = ensemble_nn_and_df.evaluate(
    test_dataset, return_dict=True)

print("Accuracy (2xNN and 2xDF): ", evaluation_nn_and_df["accuracy"])
print("Loss (2xNN and 2xDF): ", evaluation_nn_and_df["loss"])

100/100 [==============================] - 2s 10ms/step - loss: 0.4226 - accuracy: 0.7953
Accuracy (2xNN and 2xDF):  0.7953000068664551
Loss (2xNN and 2xDF):  0.4225609302520752

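As a final step, you could fine-tune the neural network layers a bit more. Note that the pre-trained embedding (the shared preprocessing layer) should not be fine-tuned, because the DF models depend on it (unless they are also retrained afterwards).

In summary, you have: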

Accuracy (NN #1 and #2 only): 0.783600
Accuracy (DF #3 only):        0.799000
Accuracy (DF #4 only):        0.798900
----------------------------------------
Accuracy (2xNN and 2xDF):     0.795300
                              +0.011700 over NN #1 and #2 only
                              -0.003700 over DF #3 only
                              -0.003600 over DF #4 only
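The differences in this summary can be recomputed from the reported accuracies. The sketch below hard-codes the rounded values printed above rather than reading them from the evaluation dictionaries, so it is an illustration of the arithmetic and not the notebook's own summary cell.

```python
# Accuracies copied from the summary above.
accuracies = {
    "NN #1 and #2 only": 0.7836,
    "DF #3 only": 0.7990,
    "DF #4 only": 0.7989,
}
ensemble_accuracy = 0.7953

# Gain (or loss) of the full ensemble relative to each part.
deltas = {name: ensemble_accuracy - acc for name, acc in accuracies.items()}

for name, delta in deltas.items():
    print(f"{delta:+.6f} over {name}")
```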

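In this run, the composed model outperforms the two neural networks alone (+0.0117) but scores slightly below each decision forest taken individually (about -0.004). When the sub-models make complementary errors, a composed model can perform better than each of its parts, which is why ensembles are often effective.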

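What's next?

In this example, you saw how to combine decision forests with neural networks. A next step would be to further train the neural networks and the decision forests.

In addition, for the sake of clarity, the decision forests received only the preprocessed input. However, decision forests are generally very good at consuming raw data. The model could be improved by also feeding the raw features to the decision forest models.

In this example, the final model is the average of the predictions of the individual models. This solution works well if all of the models perform more or less the same. However, if one of the sub-models is very good, aggregating it with the other models might actually be detrimental (or vice versa; for example, try reducing the number of training examples and see how much it hurts the neural networks, or enable the SPARSE_OBLIQUE split in the second Random Forest model).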
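The risk of plain averaging can be seen on a toy case. The labels and probabilities below are made up for illustration: one hypothetical strong sub-model is averaged with three copies of a weaker one, and the average ends up less accurate than the strong model alone.

```python
def accuracy(probabilities, labels, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    predictions = [int(p >= threshold) for p in probabilities]
    return sum(int(p == l) for p, l in zip(predictions, labels)) / len(labels)


labels = [1, 0, 1, 0]
strong = [0.9, 0.1, 0.8, 0.2]  # hypothetical strong sub-model
weak = [0.3, 0.7, 0.6, 0.4]    # hypothetical weak sub-model, used three times

models = [strong, weak, weak, weak]
averaged = [sum(example) / len(models) for example in zip(*models)]

strong_accuracy = accuracy(strong, labels)
weak_accuracy = accuracy(weak, labels)
averaged_accuracy = accuracy(averaged, labels)
print(strong_accuracy, weak_accuracy, averaged_accuracy)  # prints: 1.0 0.5 0.5
```

In such a situation, an unweighted mean lets the weaker models outvote the strong one.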