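Learning objectives

The TensorFlow Models NLP library is a collection of tools for building and training modern, high-performance natural language models.

tfm.nlp.networks.EncoderScaffold is the core of this library, and many new network architectures have been proposed to improve the encoder. In this Colab notebook, we will learn how to customize the encoder to use new network architectures.

Install and import

Install the TensorFlow Model Garden pip package

tf-models-official is the stable Model Garden package. Note that it may not include the latest changes in the tensorflow_models GitHub repo. To include the latest changes, you can install tf-models-nightly, which is the nightly Model Garden package created automatically every day. pip will install all models and dependencies automatically.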

pip install -q opencv-python

pip install -q tf-models-official

Import TensorFlow and other libraries

import numpy as np
import tensorflow as tf

import tensorflow_models as tfm
nlp = tfm.nlp


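Canonical BERT encoder

Before learning how to customize the encoder, let's first create a canonical BERT encoder and use it to instantiate a bert_classifier.BertClassifier for a classification task.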

cfg = {
    "vocab_size": 100,
    "hidden_size": 32,
    "num_layers": 3,
    "num_attention_heads": 4,
    "intermediate_size": 64,
    "activation": tfm.utils.activations.gelu,
    "dropout_rate": 0.1,
    "attention_dropout_rate": 0.1,
    "max_sequence_length": 16,
    "type_vocab_size": 2,
    "initializer": tf.keras.initializers.TruncatedNormal(stddev=0.02),
}
bert_encoder = nlp.networks.BertEncoder(**cfg)

def build_classifier(bert_encoder):
    return nlp.models.BertClassifier(bert_encoder, num_classes=2)

canonical_classifier_model = build_classifier(bert_encoder)
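To make the numbers in cfg concrete, here is a back-of-the-envelope parameter count. It is a pure-Python sketch that assumes the standard BERT layout (biased query/key/value/output projections, a LayerNorm after attention and after the feedforward block, learned word/position/type embedding tables plus an embedding LayerNorm, and a dense pooler). It has not been checked against the exact variables tfm creates, so treat bert_encoder.count_params() as the authority; the helper names are illustrative.

```python
# Sizes copied from `cfg` above (only the fields that affect parameter counts).
size_cfg = {
    "vocab_size": 100,
    "hidden_size": 32,
    "num_layers": 3,
    "num_attention_heads": 4,
    "intermediate_size": 64,
    "max_sequence_length": 16,
    "type_vocab_size": 2,
}


def dense_params(n_in, n_out):
    """Kernel plus bias of a fully connected projection."""
    return n_in * n_out + n_out


def layer_norm_params(width):
    """LayerNorm scale (gamma) and offset (beta)."""
    return 2 * width


def bert_param_counts(c):
    """Parameter counts under the assumed standard BERT layout."""
    h, i = c["hidden_size"], c["intermediate_size"]
    if h % c["num_attention_heads"]:
        raise ValueError("hidden_size must be divisible by num_attention_heads")
    attention = 4 * dense_params(h, h)  # query, key, value, output projections
    feedforward = dense_params(h, i) + dense_params(i, h)
    per_layer = attention + feedforward + 2 * layer_norm_params(h)
    vocab_rows = c["vocab_size"] + c["max_sequence_length"] + c["type_vocab_size"]
    embeddings = vocab_rows * h + layer_norm_params(h)
    pooler = dense_params(h, h)
    return {
        "head_size": h // c["num_attention_heads"],
        "per_layer": per_layer,
        "embeddings": embeddings,
        "pooler": pooler,
        "total": embeddings + c["num_layers"] * per_layer + pooler,
    }


counts = bert_param_counts(size_cfg)
print(counts)
# {'head_size': 8, 'per_layer': 8544, 'embeddings': 3840, 'pooler': 1056, 'total': 30528}
```

Each of the 4 heads works in an 8-dimensional subspace (32 / 4), and under this layout the three Transformer layers hold most of the parameters.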

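canonical_classifier_model can be trained on training data. For details on how to train the model, see the fine-tuning BERT notebook. We omit the training code here.

After training, we can apply the model to make predictions.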

def predict(model):
    batch_size = 3
    np.random.seed(0)
    word_ids = np.random.randint(
        cfg["vocab_size"], size=(batch_size, cfg["max_sequence_length"]))
    mask = np.random.randint(2, size=(batch_size, cfg["max_sequence_length"]))
    type_ids = np.random.randint(
        cfg["type_vocab_size"], size=(batch_size, cfg["max_sequence_length"]))
    print(model([word_ids, mask, type_ids], training=False))

predict(canonical_classifier_model)

tf.Tensor(
[[ 0.03545166  0.30729884]
 [ 0.00677404  0.17251147]
 [-0.07276718  0.17345032]], shape=(3, 2), dtype=float32)
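predict() feeds random word ids, masks and type ids, which is enough to exercise the model but does not look like real input. The sketch below shows the usual BERT packing convention for a sentence pair: the mask is 1 for real tokens and 0 for padding, and type ids are 0 for the first segment and 1 for the second. The special-token ids (PAD_ID, CLS_ID, SEP_ID) and the helper pack_pair are hypothetical; a real vocabulary and tokenizer define them.

```python
# Hypothetical special-token ids; a real vocabulary defines these.
PAD_ID, CLS_ID, SEP_ID = 0, 1, 2


def pack_pair(tokens_a, tokens_b, max_len):
    """Builds (word_ids, mask, type_ids) for one sentence pair, BERT style:
    [CLS] a... [SEP] b... [SEP], then padding up to max_len."""
    word_ids = [CLS_ID] + tokens_a + [SEP_ID] + tokens_b + [SEP_ID]
    if len(word_ids) > max_len:
        raise ValueError("pair too long; truncate tokens_a / tokens_b first")
    type_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    mask = [1] * len(word_ids)  # 1 marks a real token
    pad = max_len - len(word_ids)
    return word_ids + [PAD_ID] * pad, mask + [0] * pad, type_ids + [0] * pad


ids, input_mask, segment_ids = pack_pair([11, 12, 13], [21, 22], max_len=16)
print(ids)          # [1, 11, 12, 13, 2, 21, 22, 2, 0, 0, 0, 0, 0, 0, 0, 0]
print(input_mask)   # [1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
print(segment_ids)  # [0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
```

Stacking several such rows with np.array gives arrays of shape (batch_size, max_sequence_length) that can be passed to the model in the same [word_ids, mask, type_ids] order that predict() uses.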

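Customize the BERT encoder

A BERT encoder consists of an embedding network and multiple Transformer blocks, and each Transformer block contains an attention layer and a feedforward layer.

We provide easy ways to customize each of these components via (1) EncoderScaffold and (2) TransformerScaffold.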
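The scaffold idea itself is framework-independent: the scaffold owns the wiring (embed, then run a stack of hidden layers) while the pieces are injected. The toy below is not tfm's implementation; ToyEncoderScaffold, AddBias and toy_embedding are hypothetical names, and plain Python callables stand in for Keras layers. It only mirrors the embedding_cls / hidden_cls / hidden_cfg / num_hidden_instances arguments used later in this notebook.

```python
class ToyEncoderScaffold:
    """Hypothetical scaffold: an injected embedding callable plus a stack of
    injected hidden-layer instances, all built from the same config."""

    def __init__(self, embedding_cls, hidden_cls, hidden_cfg, num_hidden_instances):
        self.embedding = embedding_cls
        # One independent instance per layer, each created from hidden_cfg.
        self.layers = [hidden_cls(**hidden_cfg) for _ in range(num_hidden_instances)]

    def __call__(self, inputs):
        x = self.embedding(inputs)
        outputs = []
        for layer in self.layers:
            x = layer(x)
            outputs.append(x)
        return outputs  # every layer's output, like return_all_layer_outputs=True


class AddBias:
    """Toy 'Transformer layer': adds a constant to every element."""

    def __init__(self, bias):
        self.bias = bias

    def __call__(self, x):
        return [v + self.bias for v in x]


def toy_embedding(word_ids):
    """Toy 'embedding': maps each id to a float."""
    return [float(i) for i in word_ids]


toy_encoder = ToyEncoderScaffold(toy_embedding, AddBias, {"bias": 1.0}, num_hidden_instances=3)
print(toy_encoder([1, 2]))  # [[2.0, 3.0], [3.0, 4.0], [4.0, 5.0]]
```

Replacing AddBias with another class, or toy_embedding with another callable, changes the model without touching ToyEncoderScaffold, which is the property the EncoderScaffold examples in this notebook rely on.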

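Use EncoderScaffold

networks.EncoderScaffold allows users to provide a custom embedding subnetwork (which replaces the standard embedding logic) and/or a custom hidden layer class (which replaces the Transformer instantiation in the encoder).

Without customization

Without any customization, networks.EncoderScaffold behaves the same as the canonical networks.BertEncoder.

As the following example shows, networks.EncoderScaffold can load the weights of a networks.BertEncoder and output the same values: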

default_hidden_cfg = dict(
    num_attention_heads=cfg["num_attention_heads"],
    intermediate_size=cfg["intermediate_size"],
    intermediate_activation=cfg["activation"],
    dropout_rate=cfg["dropout_rate"],
    attention_dropout_rate=cfg["attention_dropout_rate"],
    kernel_initializer=cfg["initializer"],
)
default_embedding_cfg = dict(
    vocab_size=cfg["vocab_size"],
    type_vocab_size=cfg["type_vocab_size"],
    hidden_size=cfg["hidden_size"],
    initializer=cfg["initializer"],
    dropout_rate=cfg["dropout_rate"],
    max_seq_length=cfg["max_sequence_length"]
)
default_kwargs = dict(
    hidden_cfg=default_hidden_cfg,
    embedding_cfg=default_embedding_cfg,
    num_hidden_instances=cfg["num_layers"],
    pooled_output_dim=cfg["hidden_size"],
    return_all_layer_outputs=True,
    pooler_layer_initializer=cfg["initializer"],
)

encoder_scaffold = nlp.networks.EncoderScaffold(**default_kwargs)
classifier_model_from_encoder_scaffold = build_classifier(encoder_scaffold)
classifier_model_from_encoder_scaffold.set_weights(
    canonical_classifier_model.get_weights())
predict(classifier_model_from_encoder_scaffold)

tf.Tensor(
[[ 0.03545166  0.30729884]
 [ 0.00677404  0.17251147]
 [-0.07276718  0.17345032]], shape=(3, 2), dtype=float32)
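Identical outputs are possible because Keras's Model.set_weights assigns a list of arrays to the model's weights in order and rejects a list whose length or shapes do not match. Matching predictions therefore suggest that the default EncoderScaffold creates the same variables, in the same order and with the same shapes, as BertEncoder. The toy below models only that positional, shape-checked copy using nested lists; copy_weights and shape_of are hypothetical helpers, not Keras code.

```python
def shape_of(nested):
    """Shape of a rectangular nested list, e.g. [[1, 2], [3, 4]] -> (2, 2)."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0] if nested else None
    return tuple(shape)


def copy_weights(target, source):
    """Positional copy: source[i] replaces target[i]; count and shapes must match."""
    if len(target) != len(source):
        raise ValueError(f"expected {len(target)} arrays, got {len(source)}")
    for i, (t, s) in enumerate(zip(target, source)):
        if shape_of(t) != shape_of(s):
            raise ValueError(f"weight {i}: got shape {shape_of(s)}, expected {shape_of(t)}")
    return list(source)


# A (2, 2) kernel followed by a (2,) bias, in the same order in both "models".
trained = [[[0.1, 0.2], [0.3, 0.4]], [0.5, 0.6]]
fresh = [[[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0]]
loaded = copy_weights(fresh, trained)

try:
    copy_weights(fresh, list(reversed(trained)))  # same arrays, wrong order
except ValueError as err:
    print(err)  # weight 0: got shape (2,), expected (2, 2)
```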

自定义嵌入

接下来,我们将展示如何使用自定义嵌入网络。

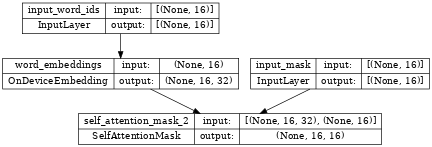

我们首先构建一个嵌入网络,它将替换默认网络。它将具有 2 个输入(mask 和 word_ids)而不是 3 个,并且不会使用位置嵌入。

word_ids = tf.keras.layers.Input(
    shape=(cfg['max_sequence_length'],), dtype=tf.int32, name="input_word_ids")
mask = tf.keras.layers.Input(
    shape=(cfg['max_sequence_length'],), dtype=tf.int32, name="input_mask")
embedding_layer = nlp.layers.OnDeviceEmbedding(
    vocab_size=cfg['vocab_size'],
    embedding_width=cfg['hidden_size'],
    initializer=cfg["initializer"],
    name="word_embeddings")

word_embeddings = embedding_layer(word_ids)
attention_mask = nlp.layers.SelfAttentionMask()([word_embeddings, mask])
new_embedding_network = tf.keras.Model([word_ids, mask],
                                       [word_embeddings, attention_mask])

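To see what new_embedding_network computes, here is a framework-free sketch of its two steps: the embedding lookup done by OnDeviceEmbedding (row i of the table is the vector for token id i) and, as we understand it, the broadcast done by SelfAttentionMask, which turns a [batch, seq] padding mask into a [batch, from_seq, to_seq] attention mask. Nested lists stand in for tensors, and the tiny table is made up. Note that this custom network returns the raw word embeddings: there are no position or type embeddings and, as written, no normalization or dropout.

```python
def embed(word_ids, table):
    """Embedding lookup: token id i selects row i of the table."""
    return [[table[i] for i in row] for row in word_ids]


def self_attention_mask(input_mask, from_seq_length):
    """Broadcast [batch, to_seq] to [batch, from_seq, to_seq]:
    out[b][i][j] == input_mask[b][j], so every query position i may attend
    only to the non-padding key positions j of the same example."""
    return [[list(row) for _ in range(from_seq_length)] for row in input_mask]


table = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]  # made-up vocab of 3, width 2
token_ids = [[1, 2, 0]]                        # batch of 1, sequence length 3
padding_mask = [[1, 1, 0]]                     # last position is padding

embeddings = embed(token_ids, table)
attn_mask = self_attention_mask(padding_mask, from_seq_length=len(token_ids[0]))
print(embeddings)  # [[[1.0, 1.0], [2.0, 2.0], [0.0, 0.0]]]
print(attn_mask)   # [[[1, 1, 0], [1, 1, 0], [1, 1, 0]]]
```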

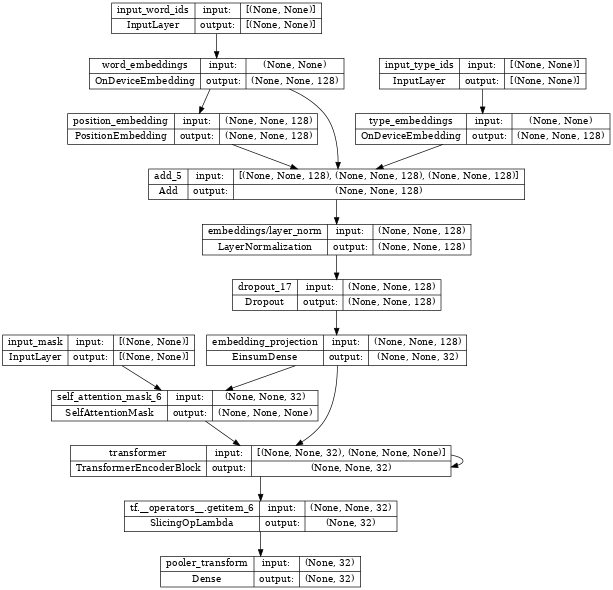

Inspecting new_embedding_network, we can see that it takes two inputs: input_word_ids and input_mask.

tf.keras.utils.plot_model(new_embedding_network, show_shapes=True, dpi=48)

Then we can build a new encoder using the new_embedding_network above.

kwargs = dict(default_kwargs)

# Use new embedding network.
kwargs['embedding_cls'] = new_embedding_network
# Expose the custom network's word-embedding table to the scaffold
# (needed, for example, for masked-LM pretraining).
kwargs['embedding_data'] = embedding_layer.embeddings

encoder_with_customized_embedding = nlp.networks.EncoderScaffold(**kwargs)
classifier_model = build_classifier(encoder_with_customized_embedding)
# ... Train the model ...
print(classifier_model.inputs)

# Assert that there are only two inputs.
assert len(classifier_model.inputs) == 2

[<KerasTensor: shape=(None, 16) dtype=int32 (created by layer 'input_word_ids')>, <KerasTensor: shape=(None, 16) dtype=int32 (created by layer 'input_mask')>]

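Customize the Transformer

Users can also override the hidden_cls argument of the networks.EncoderScaffold constructor to use a customized Transformer layer.

See the source code of nlp.layers.ReZeroTransformer for how to implement a customized Transformer layer.

The following is an example of using nlp.layers.ReZeroTransformer: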

kwargs = dict(default_kwargs)

# Use ReZeroTransformer.
kwargs['hidden_cls'] = nlp.layers.ReZeroTransformer

encoder_with_rezero_transformer = nlp.networks.EncoderScaffold(**kwargs)
classifier_model = build_classifier(encoder_with_rezero_transformer)
# ... Train the model ...
predict(classifier_model)

# Assert that the variable `rezero_alpha` from ReZeroTransformer exists.
assert 'rezero_alpha' in ''.join([x.name for x in classifier_model.trainable_weights])

tf.Tensor(
[[-0.08663296  0.09281035]
 [-0.07291833  0.36477187]
 [-0.08730186  0.1503254 ]], shape=(3, 2), dtype=float32)
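ReZero scales each residual branch by a learnable scalar that starts at zero, x + alpha * F(x), instead of relying on normalization to keep deep stacks trainable. With alpha = 0, every layer initially passes its input through unchanged, and training grows alpha as needed. The rezero_alpha variable checked by the assertion above plays the role of alpha. The sketch shows only this update in plain Python; toy_sublayer is a made-up stand-in for the attention or feedforward sub-layer, and other details of tfm's ReZeroTransformer are left out.

```python
def rezero_residual(x, sublayer, alpha):
    """ReZero-style residual, elementwise: x + alpha * sublayer(x)."""
    fx = sublayer(x)
    return [xi + alpha * fi for xi, fi in zip(x, fx)]


def toy_sublayer(x):
    """Made-up stand-in for attention or the feedforward block."""
    return [2.0 * v + 1.0 for v in x]


x = [0.5, -1.0, 3.0]
print(rezero_residual(x, toy_sublayer, alpha=0.0))  # [0.5, -1.0, 3.0]  (identity at init)
print(rezero_residual(x, toy_sublayer, alpha=0.1))  # roughly [0.7, -1.1, 3.7]
```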

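Use nlp.layers.TransformerScaffold

The method above requires rewriting the entire nlp.layers.Transformer layer, whereas sometimes you may only want to customize the attention layer or the feedforward block. In that case, nlp.layers.TransformerScaffold can be used.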
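The division of labor in TransformerScaffold can be pictured with the toy block below: the block owns the residual connections and normalization, while the attention and feedforward sub-layers are injected, mirroring attention_cls and feedforward_cls. ToyTransformerScaffold, uniform_attention and double_ffn are hypothetical. The post-LN ordering (add, then normalize) follows the original BERT block and is an assumption of the sketch, and this LayerNorm has no learned scale or offset.

```python
def add(a, b):
    """Elementwise sum of two [seq][width] nested lists."""
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def layer_norm(seq, eps=1e-6):
    """Normalize each position to zero mean and unit variance (no learned params)."""
    out = []
    for row in seq:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / (var + eps) ** 0.5 for v in row])
    return out


class ToyTransformerScaffold:
    """Hypothetical block with injected attention and feedforward sub-layers."""

    def __init__(self, attention, feedforward):
        self.attention = attention
        self.feedforward = feedforward

    def __call__(self, seq):
        # Post-LN: residual add, then normalize, after each sub-layer.
        h = layer_norm(add(seq, self.attention(seq)))
        return layer_norm(add(h, self.feedforward(h)))


def uniform_attention(seq):
    """Toy attention: every position attends equally to all positions."""
    avg = [sum(col) / len(seq) for col in zip(*seq)]
    return [list(avg) for _ in seq]


def double_ffn(seq):
    """Toy feedforward block applied independently at each position."""
    return [[2.0 * v for v in row] for row in seq]


block = ToyTransformerScaffold(uniform_attention, double_ffn)
out = block([[1.0, 2.0], [3.0, 4.0]])
print(out)  # approximately [[-1.0, 1.0], [-1.0, 1.0]]
```

Swapping uniform_attention for another callable changes only the attention computation; the residual and normalization wiring stays in one place, which is what lets a single sub-layer be replaced on its own.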

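Customize the attention layer

Users can also override the attention_cls argument of the layers.TransformerScaffold constructor to use a customized attention layer.

See the source code of nlp.layers.TalkingHeadsAttention for how to implement a customized attention layer.

The following is an example of using nlp.layers.TalkingHeadsAttention: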

# Use TalkingHeadsAttention
hidden_cfg = dict(default_hidden_cfg)
hidden_cfg['attention_cls'] = nlp.layers.TalkingHeadsAttention

kwargs = dict(default_kwargs)
kwargs['hidden_cls'] = nlp.layers.TransformerScaffold
kwargs['hidden_cfg'] = hidden_cfg

encoder = nlp.networks.EncoderScaffold(**kwargs)
classifier_model = build_classifier(encoder)
# ... Train the model ...
predict(classifier_model)

# Assert that the variable `pre_softmax_weight` from TalkingHeadsAttention exists.
assert 'pre_softmax_weight' in ''.join([x.name for x in classifier_model.trainable_weights])

tf.Tensor(
[[-0.20591784  0.09203205]
 [-0.0056177  -0.10278902]
 [-0.21681327 -0.12282   ]], shape=(3, 2), dtype=float32)

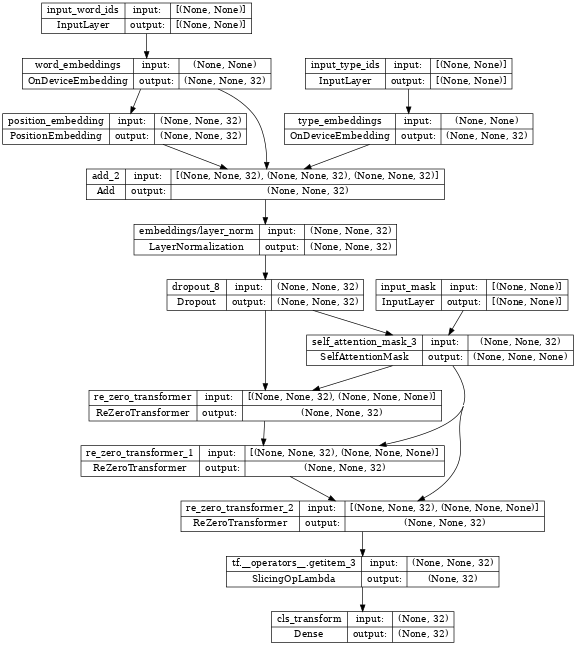

tf.keras.utils.plot_model(encoder_with_rezero_transformer, show_shapes=True, dpi=48)
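Talking-heads attention differs from standard multi-head attention by mixing information across heads: the attention logits of all heads are combined with a learned heads-by-heads matrix before the softmax, and the resulting probabilities are combined with a second matrix after it. The pre-softmax matrix corresponds to the pre_softmax_weight variable that the assertion above looks for. The sketch applies the two mixes to precomputed logits in plain Python (nested lists shaped [heads][queries][keys]); the query/key/value projections are omitted, and the function names are illustrative. With identity matrices it reduces to the ordinary per-head softmax.

```python
import math


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def mix_heads(t, proj):
    """out[o][q][k] = sum_i proj[i][o] * t[i][q][k], where t is [heads][q][k]."""
    n_in, n_out = len(proj), len(proj[0])
    n_q, n_k = len(t[0]), len(t[0][0])
    return [[[sum(proj[i][o] * t[i][q][k] for i in range(n_in))
              for k in range(n_k)]
             for q in range(n_q)]
            for o in range(n_out)]


def talking_heads_probs(logits, pre_softmax, post_softmax):
    """Mix logits across heads, softmax over keys, then mix the probabilities."""
    mixed_logits = mix_heads(logits, pre_softmax)
    probs = [[softmax(row) for row in head] for head in mixed_logits]
    return mix_heads(probs, post_softmax)


# 2 heads, 1 query, 2 keys.
logits = [[[0.0, 0.0]], [[1.0, -1.0]]]
identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]

plain = talking_heads_probs(logits, identity, identity)  # ordinary multi-head softmax
swapped = talking_heads_probs(logits, swap, identity)    # heads exchange logits
print(plain[0])  # [[0.5, 0.5]]
```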

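Customize the feedforward layer

Similarly, you can also customize the feedforward layer.

See the source code of nlp.layers.GatedFeedforward for how to implement a customized feedforward layer.

The following is an example of using nlp.layers.GatedFeedforward: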

# Use GatedFeedforward
hidden_cfg = dict(default_hidden_cfg)
hidden_cfg['feedforward_cls'] = nlp.layers.GatedFeedforward

kwargs = dict(default_kwargs)
kwargs['hidden_cls'] = nlp.layers.TransformerScaffold
kwargs['hidden_cfg'] = hidden_cfg

encoder_with_gated_feedforward = nlp.networks.EncoderScaffold(**kwargs)
classifier_model = build_classifier(encoder_with_gated_feedforward)
# ... Train the model ...
predict(classifier_model)

# Assert that the variable `gate` from GatedFeedforward exists.
assert 'gate' in ''.join([x.name for x in classifier_model.trainable_weights])

tf.Tensor(
[[-0.10270456 -0.10999684]
 [-0.03512481  0.15430304]
 [-0.23601504 -0.18162844]], shape=(3, 2), dtype=float32)
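GatedFeedforward belongs to the gated-linear-unit (GLU) family of feedforward blocks: alongside the usual intermediate projection there is a second, "gate" projection of the same input, and the two are multiplied elementwise before the output projection. The gate variable in the assertion above presumably belongs to that extra projection. The sketch shows only this gating core in plain Python, with biases, dropout, the residual connection and normalization omitted; putting the activation on the intermediate branch (GEGLU-style, with the tanh approximation of GELU) is an assumption, and all names are illustrative.

```python
import math


def gelu(v):
    """tanh approximation of GELU."""
    return 0.5 * v * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)))


def matvec(x, w):
    """Row vector x (length d_in) times matrix w ([d_in][d_out])."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def gated_feedforward(x, w_in, w_gate, w_out, activation=gelu):
    """(activation(x W_in) * (x W_gate)) W_out, biases omitted."""
    hidden = [activation(h) for h in matvec(x, w_in)]
    gate = matvec(x, w_gate)
    return matvec([h * g for h, g in zip(hidden, gate)], w_out)


eye = [[1.0, 0.0], [0.0, 1.0]]
w_out = [[1.0], [1.0]]  # sum the two gated units into one output

# With an identity activation the arithmetic is easy to follow:
# x W_in = [1, 2], x W_gate = [1, 2], product = [1, 4], output = [5].
print(gated_feedforward([1.0, 2.0], eye, eye, w_out, activation=lambda v: v))  # [5.0]

# A zero gate shuts the block off regardless of the other weights.
zero_gate = [[0.0, 0.0], [0.0, 0.0]]
print(gated_feedforward([1.0, 2.0], eye, zero_gate, w_out))  # [0.0]
```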

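Build a new encoder

Finally, you can also build a new encoder using the building blocks in the modeling library.

See the source code of nlp.networks.AlbertEncoder as an example.

The following is an example of using nlp.networks.AlbertEncoder: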

albert_encoder = nlp.networks.AlbertEncoder(**cfg)
classifier_model = build_classifier(albert_encoder)
# ... Train the model ...
predict(classifier_model)

tf.Tensor(
[[-0.00369881 -0.2540995 ]
 [ 0.1235221  -0.2959229 ]
 [-0.08698564 -0.17653546]], shape=(3, 2), dtype=float32)

Inspecting albert_encoder, we see that it stacks the same Transformer layer multiple times (note the loop-back on the "Transformer" block in the plot below).

tf.keras.utils.plot_model(albert_encoder, show_shapes=True, dpi=48)
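Reusing one Transformer layer shrinks the stack's parameter count by a factor of num_layers while leaving the amount of computation unchanged, because the shared layer still runs num_layers times. The arithmetic below uses cfg's hidden_size = 32 and intermediate_size = 64 and assumes a standard BERT-style layer (biased Q/K/V/output projections, two LayerNorms, two feedforward dense layers). It ignores ALBERT's other changes, such as its factorized embeddings, and has not been checked against the variables AlbertEncoder actually creates; the function names are illustrative.

```python
def transformer_layer_params(hidden, intermediate):
    """One BERT-style layer: Q/K/V/output projections, two LayerNorms and
    the two feedforward dense layers, all with biases."""
    attention = 4 * (hidden * hidden + hidden)
    layer_norms = 2 * (2 * hidden)
    feedforward = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    return attention + layer_norms + feedforward


def stack_params(hidden, intermediate, num_layers, share_layers):
    """Parameters held by the stack; sharing keeps only one copy of the layer."""
    one_layer = transformer_layer_params(hidden, intermediate)
    return one_layer if share_layers else one_layer * num_layers


def apply_stack(layer, x, num_layers):
    """A shared stack applies the same layer object num_layers times."""
    for _ in range(num_layers):
        x = layer(x)
    return x


print(stack_params(32, 64, 3, share_layers=False))  # 25632 (3 separate layers)
print(stack_params(32, 64, 3, share_layers=True))   # 8544  (1 shared layer)
print(apply_stack(lambda v: v * 2, 1, num_layers=3))  # 8
```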